As a follow-up to my last post (ZFS SLOG Performance Testing of SSDs including Intel P4800X to Samsung 850 Evo), I wanted to focus specifically on the Intel Optane devices in a slightly faster test machine. These are incredible devices, especially the P1600X 118GB M.2, which was $59 at my latest check. I hope you enjoy this quick review and benchmark.

What is ZFS & SLOG/ZIL

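A short summary: ZFS is a highly advanced and adaptable file system with many performance features, including the ZIL (ZFS Intent Log) and the SLOG (Separate Log device). The ZIL records synchronous writes so they can be acknowledged before the next transaction group is written to the pool, and a SLOG moves the ZIL onto a dedicated fast device. The result behaves much like a write cache for synchronous writes, although the SLOG is only read back after a crash or power loss. For a more detailed write-up, see Jim Salter’s ZFS sync/async ZIL/SLOG guide.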

Now to jump right into the performance.

A different (faster) test machine

I popped the P1600X into an M.2 slot of a different machine I have here at home and was very surprised at how much faster it was than in the previous test box (whose M.2 slot was also limited to a single PCIe lane). I know the Xeon D series isn’t exactly known for speed, but people often say CPU speed doesn’t really matter for storage. I guess playing in the Major Leagues with these Optane devices means that processor speed does in fact matter. The single-thread speed of the 2678v3 isn’t much higher than that of the D-1541 (a similar-era Xeon), but its multi-thread performance is ~40% higher.

Test machine specs:

- Somewhat generic 1U case with 4×3.5″ bays

- AsrockRack EPC612D8

- Intel Xeon E5-2678v3

- 2x32GB 2400 MHz (running at 2133 MHz, the maximum memory speed the v3 CPU supports)

- Consumer Samsung NVMe boot drive (256GB)

- 1x Intel D3-S4610 as ZFS test-pool

- 2x Samsung PM853T 480GB as boot mirror for Proxmox (not used)

- 1x Intel Optane DC P1600X 58GB in the first M.2 slot

- 1x Intel Optane DC P4800X in the 2nd PCIe slot (via x16 riser)

Machine info:

```
root@truenas-1u[~]# uname -a
FreeBSD truenas-1u.home.fluffnet.net 13.1-RELEASE-p2 FreeBSD 13.1-RELEASE-p2 n245412-484f039b1d0 TRUENAS amd64
root@truenas-1u[~]# zfs -V
zfs-2.1.6-1
zfs-kmod-v2022101100-zfs_2a0dae1b7
root@truenas-1u[~]# fio -v
fio-3.28
root@truenas-1u[~]#
```

The test drives

Intel did provide the Optane devices but did not make any demands on what to write about or how to write it, nor did they review any of these posts before publishing.

Intel Optane DC P1600X 58GB M.2

Intel Optane DC P4800X 375GB AIC

This drive is installed “face down”, so here’s a picture of it on my desk in its shipping tray. The other side is populated with many memory chips.

TrueNAS diskinfo for Intel P1600X

I wanted to re-run this test in a machine where the M.2 slot wasn’t limited to a single PCIe lane (x1). The results are far better, even for latency, which I wasn’t expecting. At the 4k size, the latency is 13.6 microseconds per write. That works out to roughly 73.5k IOPS (1 / 13.6 microseconds), which is great for this simple test (I believe it runs at QD=1). In the Xeon D-1541 machine with an x1 M.2 slot, the latencies were roughly double, and the throughput topped out at 374 MB/s. For comparison, spinning hard drives typically have sync write latencies in the 13 millisecond range, so the Optane commits writes roughly 1000x faster (13 ms / 13.6 microseconds ≈ 956).
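The latency-to-IOPS arithmetic is easy to get tangled in units, so here’s a minimal sketch of it in POSIX sh and awk. It assumes exactly one write in flight (QD=1), so IOPS is simply 1 / latency; the helper names are my own. The 4k row reproduces diskinfo’s 287.2 Mbytes/s figure only when a Mbyte is taken as 1,048,576 bytes, which suggests that column is really MiB/s.

```sh
#!/bin/sh
# Convert a per-IO latency (microseconds) into queue-depth-1 numbers.
# Assumption: exactly one IO is in flight at a time, so IOPS = 1 / latency.

# iops <usec>: IOs per second at QD=1
iops() {
    awk -v us="$1" 'BEGIN { printf "%.0f\n", 1e6 / us }'
}

# mibps <block_kbytes> <usec>: throughput in MiB/s (1 MiB = 1024 KiB),
# which matches how diskinfo's "Mbytes/s" column appears to be computed
mibps() {
    awk -v kb="$1" -v us="$2" 'BEGIN { printf "%.1f\n", (kb / 1024) / (us / 1e6) }'
}

# speedup <slow_usec> <fast_usec>: how many times lower the fast latency is
speedup() {
    awk -v slow="$1" -v fast="$2" 'BEGIN { printf "%.0f\n", slow / fast }'
}

echo "P1600X 4k IOPS:         $(iops 13.6)"
echo "P1600X 4k MiB/s:        $(mibps 4 13.6)"
echo "13 ms HDD vs 13.6 usec: $(speedup 13000 13.6)x"
```

Running it prints 73529 IOPS, 287.2 MiB/s and a 956x ratio for the 13 ms hard drive case.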

```
root@truenas-1u[/mnt/test-pool]# diskinfo -wS /dev/nvd1
/dev/nvd1
    4096                    # sectorsize
    58977157120             # mediasize in bytes (55G)
    14398720                # mediasize in sectors
    0                       # stripesize
    0                       # stripeoffset
    INTEL SSDPEK1A058GA     # Disk descr.
    PHOC209200Q5058A        # Disk ident.
    nvme1                   # Attachment
    Yes                     # TRIM/UNMAP support
    0                       # Rotation rate in RPM

Synchronous random writes:
       4 kbytes:     13.6 usec/IO =    287.2 Mbytes/s
       8 kbytes:     17.8 usec/IO =    439.8 Mbytes/s
      16 kbytes:     26.6 usec/IO =    586.9 Mbytes/s
      32 kbytes:     43.9 usec/IO =    711.4 Mbytes/s
      64 kbytes:     79.4 usec/IO =    786.7 Mbytes/s
     128 kbytes:    151.5 usec/IO =    825.1 Mbytes/s
     256 kbytes:    280.8 usec/IO =    890.2 Mbytes/s
     512 kbytes:    545.3 usec/IO =    917.0 Mbytes/s
    1024 kbytes:   1064.4 usec/IO =    939.5 Mbytes/s
    2048 kbytes:   2105.2 usec/IO =    950.0 Mbytes/s
    4096 kbytes:   4199.2 usec/IO =    952.6 Mbytes/s
    8192 kbytes:   8367.5 usec/IO =    956.1 Mbytes/s
```
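diskinfo reports latency and throughput but not IOPS, so here’s a small sketch of a filter that adds the implied QD=1 IOPS (1e6 / usec) to each block-size line. The function name is hypothetical, and it assumes the field layout shown above. One caution if you try it yourself: `-w` allows write tests that overwrite data on the target device, so only point diskinfo at an empty drive.

```sh
#!/bin/sh
# diskinfo_iops: read `diskinfo -wS` output on stdin and, for each
# "N kbytes: X usec/IO = Y Mbytes/s" line, print the block size, the
# latency and the implied QD=1 IOPS (1e6 / usec). Other lines are ignored.
diskinfo_iops() {
    awk '/kbytes:/ && /usec\/IO/ {
        # $1 = block size in kbytes, $3 = microseconds per IO
        printf "%6d kbytes  %8.1f usec  %8.0f IOPS\n", $1, $3, 1e6 / $3
    }'
}

# Usage (destructive to the device!):
#   diskinfo -wS /dev/nvd1 | diskinfo_iops
```

Doing the division in awk keeps the helper dependency-free on a stock TrueNAS/FreeBSD shell.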

TrueNAS diskinfo for Intel P4800X

The P4800X was also faster in the Xeon E5-2678v3 machine, with latencies 8-10% lower and throughput up to 270 MB/s higher. It makes me wonder what an even faster machine could do. The v3 hardware is getting quite old at this point, but it is still excellent value for homelab use.

The 4k latency is 14.1 microseconds, which is curiously 0.5 microseconds slower than the P1600X. At every other block size, the P4800X had both lower latency and higher throughput.

```
root@truenas-1u[/mnt/test-pool]# diskinfo -wS /dev/nvd0
/dev/nvd0
    4096                    # sectorsize
    375083606016            # mediasize in bytes (349G)
    91573146                # mediasize in sectors
    0                       # stripesize
    0                       # stripeoffset
    INTEL SSDPED1K375GA     # Disk descr.
    PHKS750500G2375AGN      # Disk ident.
    nvme0                   # Attachment
    Yes                     # TRIM/UNMAP support
    0                       # Rotation rate in RPM

Synchronous random writes:
       4 kbytes:     14.1 usec/IO =    276.8 Mbytes/s
       8 kbytes:     16.0 usec/IO =    489.3 Mbytes/s
      16 kbytes:     21.2 usec/IO =    737.6 Mbytes/s
      32 kbytes:     30.3 usec/IO =   1032.6 Mbytes/s
      64 kbytes:     48.9 usec/IO =   1278.3 Mbytes/s
     128 kbytes:     86.1 usec/IO =   1451.3 Mbytes/s
     256 kbytes:    151.1 usec/IO =   1655.0 Mbytes/s
     512 kbytes:    277.8 usec/IO =   1800.0 Mbytes/s
    1024 kbytes:    536.0 usec/IO =   1865.6 Mbytes/s
    2048 kbytes:   1048.3 usec/IO =   1907.9 Mbytes/s
    4096 kbytes:   2070.8 usec/IO =   1931.6 Mbytes/s
    8192 kbytes:   4120.3 usec/IO =   1941.6 Mbytes/s
```
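To see where the P4800X pulls ahead of the P1600X, here’s a sketch that lines up two saved diskinfo runs by block size and prints the throughput ratio. The function name and the file names in the usage comment are hypothetical; it assumes each file holds the output of a `diskinfo -wS` run like the two above. With the numbers in this post, the ratio sits just under 1x at 4k and a little over 2x at 8192k.

```sh
#!/bin/sh
# compare_diskinfo <first_output> <second_output>
# Join two saved `diskinfo -wS` outputs on block size and print each
# drive's Mbytes/s figure plus the second/first ratio.
compare_diskinfo() {
    awk '
        /kbytes:/ && /usec\/IO/ {
            # fields: $1 = size in kbytes, $3 = usec/IO, $6 = Mbytes/s
            if (FNR == NR) {
                first[$1] = $6        # first file: remember throughput
            } else if ($1 in first) {
                printf "%6d kbytes  %8.1f  %8.1f  %5.2fx\n", $1, first[$1], $6, $6 / first[$1]
            }
        }
    ' "$1" "$2"
}

# Usage with outputs saved earlier (hypothetical file names):
#   compare_diskinfo p1600x.txt p4800x.txt
```

Joining on the block-size column rather than on line position keeps the comparison correct even if one run was cut short.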

fio command for performance testing

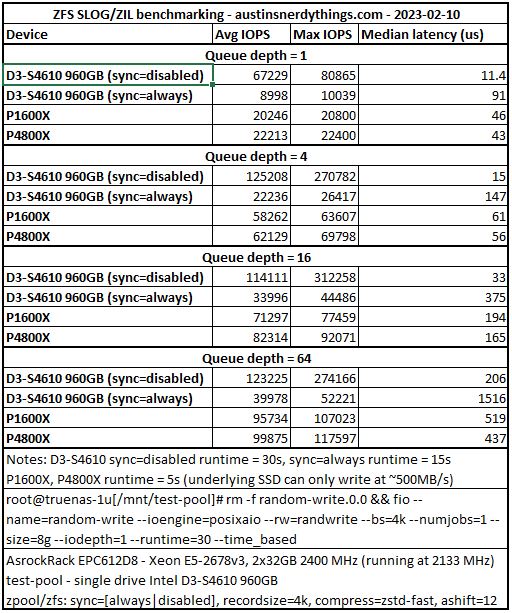

I varied the runtime (between 5 and 30 s) and the iodepth (1, 4, 16, 64). For the fast tests, a 30 s run ends up limited by the single test-pool disk’s write speed of ~500 MB/s, so I reduced those runs to 5 s, the default txg (transaction group) commit interval.

```
fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=8g --iodepth=1 --runtime=30 --time_based
```
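To repeat that run at each iodepth listed above, a small generator can print the whole sweep. This is a sketch: the function name is mine, and it assumes you run the printed commands from a directory on the pool under test (the prompts in this post show /mnt/test-pool). Only `--iodepth` and `--runtime` change; every other flag is copied from the command above.

```sh
#!/bin/sh
# fio_sweep [runtime_seconds]: print one fio command per iodepth (1, 4, 16, 64).
# Nothing runs until the output is piped to a shell.
fio_sweep() {
    runtime="${1:-30}"    # default to the 30 s used for the slower runs
    for depth in 1 4 16 64; do
        printf 'fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=8g --iodepth=%s --runtime=%s --time_based\n' \
            "$depth" "$runtime"
    done
}

# Print the short (5 s) sweep; append "| sh" to actually run it.
fio_sweep 5
```

Printing the command lines instead of calling fio directly makes it easy to eyeball the sweep before committing the pool to a few minutes of writes.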

Results

I’m just going to copy and paste (and link to) the Excel table I created, since I don’t really have a good way to do tables in my current WordPress install.

Conclusion

These P1600X drives are very, very quick, with 4k write latencies in the low teens of microseconds. With the recent price drops and the increasing prevalence of M.2 slots in storage devices, adding one as a SLOG is a super-cheap way to drastically improve sync write performance. I am always on eBay trying to find used enterprise drives with high performance-to-price ratios, and I think this one tops the charts (Amazon link: P1600X 118GB M.2). The write endurance of 1.3PB is plenty for many use cases (ServeTheHome.com notes that recycled data center SSDs rarely have more than 1PB written in their article “Used enterprise SSDs: Dissecting our production SSD population”). The fact that this M.2 drive doesn’t take up a full drive bay makes it even more appealing for storage chassis with only 4 drive bays.
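To put a 1.3PB endurance rating in everyday terms, here’s a sketch that spreads it over a service life. The five-year life and the assumption that the 1.3PB figure applies to the 118GB model are mine, for illustration only; units are decimal (1 PB = 1,000,000 GB).

```sh
#!/bin/sh
# Spread a drive's rated write endurance over an assumed service life.
# Units are decimal: 1 PB = 1,000,000 GB.

# gb_per_day <endurance_pb> <years>: average GB/day before reaching the rating
gb_per_day() {
    awk -v pb="$1" -v y="$2" 'BEGIN { printf "%.0f\n", pb * 1e6 / (y * 365) }'
}

# dwpd <endurance_pb> <years> <capacity_gb>: full drive writes per day
dwpd() {
    awk -v pb="$1" -v y="$2" -v cap="$3" \
        'BEGIN { printf "%.1f\n", pb * 1e6 / (y * 365) / cap }'
}

# 1.3 PB from the text; 5 years and 118 GB capacity are assumptions
echo "GB/day:       $(gb_per_day 1.3 5)"
echo "DWPD (118GB): $(dwpd 1.3 5 118)"
```

Under those assumptions it works out to about 712 GB of sync writes per day, or roughly 6 full drive writes per day, every day for five years.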

2 replies on “Intel Optane P1600X & P4800X as ZFS SLOG/ZIL”

The P1600X 118GB drive is down to $59 on Amazon now (July ’23). The biggest hamstring for Optanes seems to be throughput. Why not try an ASM2812 PCIe card (can be found for about $60) with 2 x M.2 slots in an x4 slot, with a pair of 118GB P1600X drives in a soft RAID 0 setup? Your IOPS shouldn’t suffer too much, but the throughput should be quite a bit better.

In a different environment, for non-critical volumes, I’ve demonstrated considerable performance with MS Windows tiered storage using that setup as an SSD tier for a large, effectively RAID 5, array. The high IOPS allow you to batter it with accesses, and the large size allows it to soak up the data well.

[…] As you can see, it is not quite complete. I still need to add the hard drive temp detection stuff to ramp case fans a bit. Those NVMe drives sure get hot (especially the P4800X I have in one of the PCIe slots – Intel Optane P1600X & P4800X as ZFS SLOG/ZIL). […]