I’ve been meaning to type this post up for a couple months, but with a 7 month old and a 29 month old at home, a new job, and a half-renovated basement, it took a bit of a back seat.

First question you may have:

What is ZFS?

ZFS stands for ‘zettabyte file system’. It has a long history that started in the early 2000s as part of Sun Microsystems’ Solaris operating system. Since then, it has undergone a decent glow-up, broken free of Oracle/Sun licensing via a rebranding as OpenZFS (I will not pretend to know half the details of how this happened), and become a default option in at least two somewhat mainstream operating systems (Ubuntu 20.04+ and Proxmox). It just so happens that those are the two primary Linux-based operating systems I use. It also just so happens that I use ZFS as the main filesystem on all of my Proxmox hosts, as well as on my network storage systems running FreeNAS/TrueNAS Core.

Why do I use ZFS?

A huge benefit of ZFS is data integrity. Long story very short, each block written to disk is written with a checksum. That checksum is checked upon reading, and if it doesn’t match, ZFS knows that the data became corrupted somewhere between writing it to disk and reading it back (could be an issue with the disk, cables, disk controller, or even a flipped bit from a cosmic ray). Luckily, ZFS has a variety of options that make this a non-issue. For anything important, I do at least a mirror, meaning two disks with the exact same data written to each. If a checksum is invalid from one disk, the other is tried. If the other checks out, the first is corrected. ZFS also runs a “scrub” at regular intervals (default is usually monthly), where it reads every single block in a pool and verifies the checksums. This catches bitrot (the tendency for data to go bad if left sitting for years) while a good copy still exists. I will admit, none of my data is super super important but I like to pretend it is.
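To make the checksum-and-repair idea concrete, here is a toy Python sketch. It is not ZFS code: it assumes SHA-256 as the checksum, models the two mirror disks as in-memory byte arrays, and every function and variable name is made up for illustration.

```python
import hashlib


def checksum(block):
    # SHA-256 is an assumption of this sketch, chosen for convenience.
    return hashlib.sha256(bytes(block)).hexdigest()


def read_with_self_heal(copies, expected):
    """Return the first copy whose checksum matches and repair the others."""
    good = None
    for copy in copies:
        if checksum(copy) == expected:
            good = bytes(copy)
            break
    if good is None:
        raise IOError("all copies failed checksum verification")
    for copy in copies:
        if checksum(copy) != expected:
            copy[:] = good  # overwrite the corrupted copy with the good data
    return good


data = b"family photos"
stored_checksum = checksum(data)

# Two "disks" in a mirror, each holding the same block.
disk_a = bytearray(data)
disk_b = bytearray(data)
disk_a[0] ^= 0x01  # simulate a single flipped bit on the first disk

result = read_with_self_heal([disk_a, disk_b], stored_checksum)
print(result == data)         # the read returned the intact data
print(bytes(disk_a) == data)  # the damaged copy was rewritten
```

A scrub, in this toy model, would simply be calling `read_with_self_heal` on every block in the pool on a schedule rather than waiting for a normal read to stumble on the bad copy.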

Performance of ZFS

ZFS can be extremely performant, if the right hardware is used. A trade-off of the excellent data integrity is the overhead involved with checksums and all that jazz. ZFS is also a copy-on-write filesystem, which means snapshots are instant, and blocks aren’t changed – just the reference to the block. Where things get slow is when you have a pool of spinning, mechanical hard drives and you request sync writes, meaning that ZFS will not say the write is completed until it is safely committed to disk. That takes 8-15 milliseconds for spinning disks. 8-15 milliseconds may not seem like a long time, but it is an eternity compared to CPU L1/L2/L3 cache and a tenth (don’t quote me on that) of an eternity compared to fast NVMe SSDs. To overcome the latency of mechanical disk access, loads of people think, “Oh, I can just slap in this old SSD from my last build as a read/write cache and call it good”. That’s what we’ll examine today.

Benchmarking ZFS sync writes

The test system is something I picked up from r/homelabsales a few months ago for $150 in a Datto case. I figured that compared to current Synology/QNAP 4-bay NAS devices, it was 1) a steal, and 2) far more capable:

- Intel Xeon D-1541 on a rebranded ASRock D1541D4U-2T8R

- 4x32GB DDR4 2400MHz

- 2x 10G from Intel X540

- LSI3008 SAS3 controller

- IPMI

- 4 bay 1U chassis

- 2x 4TB WD Red as the base mirror.

root@truenas-datto[~]# uname -a

FreeBSD truenas-datto.home.fluffnet.net 13.1-RELEASE-p2 FreeBSD 13.1-RELEASE-p2 n245412-484f039b1d0 TRUENAS amd64

root@truenas-datto[~]# zfs -V

zfs-2.1.6-1

zfs-kmod-v2022101100-zfs_2a0dae1b7

I stopped at 2x 4TB WD Reds instead of four so I could easily swap different SSDs into one of the other bays for benchmarking. I figure a basic 2 HDD mirror is decently close to many r/homelab setups. Of course, performance will roughly double for the base zpool if another mirror is added.

The guy on homelabsales had a bunch of similarly spec’d systems, all of which sold for very cheap – let me know if you got one!

The fio command used

fio is a standard benchmarking tool. I kept it simple and used the same command for each test. The following does random writes with a 4k block size (to test IOPS instead of pure bandwidth/throughput), an IO depth of 16, and a 30 second duration, with every write treated as a sync write.

Note: I set sync=always on the dataset, so I’m not specifying --fsync=1 here.

Note 2: iodepth=16 is a bit strong for home situations, but I’ve already redone this benchmark series twice, so I’m not doing it again with QD=1 for every drive. QD=1 is covered for the 3 fastest drives at the end.

fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based
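Since the same command is reused with only the runtime changed in later tests, a small helper can keep the flags consistent between runs. The sketch below is only an illustration: the function name `fio_randwrite_cmd` and its parameters are hypothetical, and the flags are exactly the ones from the command above.

```python
import shlex


def fio_randwrite_cmd(runtime_s=30, iodepth=16):
    """Build the fio command line used in this post as a single string."""
    args = [
        "fio",
        "--name=random-write",   # job name, also the prefix of the test file
        "--ioengine=posixaio",   # POSIX asynchronous IO
        "--rw=randwrite",        # random writes
        "--bs=4k",               # 4k blocks, to stress IOPS rather than bandwidth
        "--numjobs=1",           # a single worker process
        "--size=4g",             # size of the test file
        f"--iodepth={iodepth}",  # number of IOs kept in flight
        f"--runtime={runtime_s}",
        "--time_based",          # run for the full runtime, not just one pass
    ]
    return shlex.join(args)


print(fio_randwrite_cmd())
print(fio_randwrite_cmd(runtime_s=10))
```

The string can be pasted into a shell as-is or handed to `subprocess.run(..., shell=True)`; either way the dataset still needs sync=always set for the writes to be synchronous.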

I will be testing a few different drives as SLOG devices (“write cache”):

- Intel DC S3500 120GB

- Samsung 850 Evo 500GB

- HGST HUSSL4020ASS600 200GB

- HGST Ultrastar SS300 HUSMM3240ASS205 400GB

- Intel Optane P1600X M.2 form factor 58GB

- Intel Optane P4800X AIC (PCI-e form factor) 375GB

The Intel devices were graciously provided by Intel with no expectation other than running some benchmarks on them. Apparently their Optane business is winding down, which is unfortunate because it is an amazing technology. ServeTheHome.com has confirmed the P4800X AIC form-factor is being discontinued.

A short word on “write cache” for ZFS – go read Jim Salter’s ZFS sync/async ZIL/SLOG guide. He is a ZFS expert and has written many immensely helpful guides on it. Long story short, a SLOG is a write cache device, but only for sync writes. It needs to have consistent, low latency to function well. Old enterprise SSDs are ideal for this.

FreeBSD’s diskinfo command results

I briefly wanted to show the diskinfo results for the Intel devices:

Intel Optane P4800X 375GB

Bottoms out at 15 microseconds per IO for 259.6 MB/s at 4k blocksize. This is extremely fast, and is considered to be one of the best SLOG devices currently available (top 5 easily). Make no mistake, the Intel P4800X is one of the highest performing solid state drives in existence. Here’s a quote from the StorageReview.com review of the device: “For low-latency workloads, there is currently nothing that comes close to the Intel Optane SSD DC P4800X.”

There are a few form factors available, with price tags to match:

root@truenas[~]# diskinfo -wS /dev/nvd0

/dev/nvd0

4096 # sectorsize

375083606016 # mediasize in bytes (349G)

91573146 # mediasize in sectors

0 # stripesize

0 # stripeoffset

INTEL SSDPED1K375GA # Disk descr.

PHKS750500G2375AGN # Disk ident.

nvme0 # Attachment

Yes # TRIM/UNMAP support

0 # Rotation rate in RPM

Synchronous random writes:

4 kbytes: 15.0 usec/IO = 259.6 Mbytes/s

8 kbytes: 18.3 usec/IO = 427.6 Mbytes/s

16 kbytes: 23.4 usec/IO = 667.7 Mbytes/s

32 kbytes: 34.6 usec/IO = 904.3 Mbytes/s

64 kbytes: 55.9 usec/IO = 1118.8 Mbytes/s

128 kbytes: 121.1 usec/IO = 1032.2 Mbytes/s

256 kbytes: 197.8 usec/IO = 1263.7 Mbytes/s

512 kbytes: 352.2 usec/IO = 1419.5 Mbytes/s

1024 kbytes: 651.0 usec/IO = 1536.2 Mbytes/s

2048 kbytes: 1237.5 usec/IO = 1616.1 Mbytes/s

4096 kbytes: 2413.5 usec/IO = 1657.4 Mbytes/s

8192 kbytes: 4772.1 usec/IO = 1676.4 Mbytes/s

Intel Optane P1600X 58GB diskinfo

The P4800X’s little brother is no slouch. In fact, it is quicker latency-wise than any SATA or SAS SSD currently in existence. The diskinfo shows that in a slightly more capable system (Xeon E5-2678v3, 2x32GB 2133 MHz, ASRock EPC612D8), the 4k latency is even lower than the P4800X’s: an astounding 13.3 microseconds per IO. These are now available on Amazon (as a 118GB version) for $88 as of writing – Intel Optane P1600X 118GB M.2. Note: the original version of this post had the numbers from a P1600X in an M.2 slot limited to a single PCIe lane (x1). The below numbers are from a full x4 slot.

root@truenas[~]# diskinfo -wS /dev/nvd0

/dev/nvd0

512 # sectorsize

58977157120 # mediasize in bytes (55G)

115189760 # mediasize in sectors

0 # stripesize

0 # stripeoffset

INTEL SSDPEK1A058GA # Disk descr.

PHOC209200Q5058A # Disk ident.

nvme0 # Attachment

Yes # TRIM/UNMAP support

0 # Rotation rate in RPM

Synchronous random writes:

0.5 kbytes: 9.2 usec/IO = 52.9 Mbytes/s

1 kbytes: 9.3 usec/IO = 105.2 Mbytes/s

2 kbytes: 10.9 usec/IO = 179.6 Mbytes/s

4 kbytes: 13.3 usec/IO = 293.3 Mbytes/s

8 kbytes: 19.5 usec/IO = 400.3 Mbytes/s

16 kbytes: 35.2 usec/IO = 444.1 Mbytes/s

32 kbytes: 62.4 usec/IO = 500.6 Mbytes/s

64 kbytes: 116.9 usec/IO = 534.8 Mbytes/s

128 kbytes: 218.6 usec/IO = 571.7 Mbytes/s

256 kbytes: 413.4 usec/IO = 604.7 Mbytes/s

512 kbytes: 806.9 usec/IO = 619.6 Mbytes/s

1024 kbytes: 1570.5 usec/IO = 636.7 Mbytes/s

2048 kbytes: 3095.1 usec/IO = 646.2 Mbytes/s

4096 kbytes: 5889.7 usec/IO = 679.1 Mbytes/s

8192 kbytes: 12175.3 usec/IO = 657.1 Mbytes/s
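The two columns in the diskinfo output are two views of the same measurement: throughput is just block size divided by time per IO. The Python sketch below parses a few of the result lines above and recomputes IOPS and throughput. The regular expression and helper names are my own, and treating "Mbytes" as 2^20 bytes is an assumption that happens to fit these samples.

```python
import re

# Matches lines such as "4 kbytes: 15.0 usec/IO = 259.6 Mbytes/s".
LINE = re.compile(r"([\d.]+) kbytes:\s+([\d.]+) usec/IO =\s+([\d.]+) Mbytes/s")


def parse(line):
    """Return (size_kib, usec_per_io, reported_mib_s) from one result line."""
    match = LINE.search(line)
    if match is None:
        raise ValueError(f"not a diskinfo result line: {line!r}")
    return tuple(float(group) for group in match.groups())


def iops(usec_per_io):
    # One IO at a time, so IOPS is the inverse of the per-IO latency.
    return 1_000_000 / usec_per_io


def mib_per_s(size_kib, usec_per_io):
    return size_kib / 1024 * iops(usec_per_io)


samples = {
    "P4800X 4k": "4 kbytes: 15.0 usec/IO = 259.6 Mbytes/s",
    "P4800X 8192k": "8192 kbytes: 4772.1 usec/IO = 1676.4 Mbytes/s",
    "P1600X 4k": "4 kbytes: 13.3 usec/IO = 293.3 Mbytes/s",
}

for name, line in samples.items():
    size_kib, usec, reported = parse(line)
    print(f"{name}: {iops(usec):,.0f} IOPS, "
          f"{mib_per_s(size_kib, usec):.1f} MiB/s computed vs {reported} reported")
```

The small differences at 4k come from the latency being rounded to one decimal place in the output. The IOPS figure is for one outstanding write at a time on the bare device, which is why it does not match the pool-level fio numbers later on.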

On to the benchmarking results

The base topology of the zpool is a simple mirrored pair of WD Reds.

root@truenas-datto[~]# zpool create -o ashift=12 bench-pool mirror /dev/da1 /dev/da3

root@truenas-datto[~]# zfs set recordsize=4k bench-pool

root@truenas-datto[~]# zfs set compress=lz4 bench-pool

root@truenas-datto[~]# zfs set sync=always bench-pool

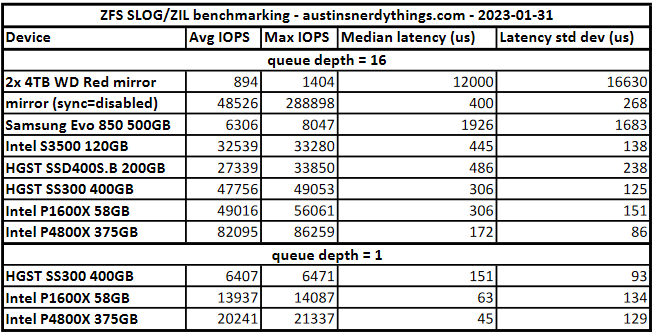

Base 2x 4TB WD Red Results

With sync write speeds: 894 IOPS, 3.6MB/s. It is 2023 – do not use uncached mechanical hard drives for sync workloads. Median completion latency of 12 milliseconds, average of 17.8 milliseconds.

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=5545KiB/s][w=1386 IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=4014: Mon Jan 30 11:13:18 2023

write: IOPS=894, BW=3578KiB/s (3664kB/s)(105MiB/30035msec); 0 zone resets

slat (nsec): min=1187, max=148381, avg=4123.14, stdev=4314.32

clat (msec): min=11, max=139, avg=17.85, stdev=16.63

lat (msec): min=11, max=139, avg=17.86, stdev=16.63

clat percentiles (msec):

| 1.00th=[ 12], 5.00th=[ 12], 10.00th=[ 12], 20.00th=[ 12],

| 30.00th=[ 12], 40.00th=[ 12], 50.00th=[ 12], 60.00th=[ 12],

| 70.00th=[ 12], 80.00th=[ 12], 90.00th=[ 49], 95.00th=[ 61],

| 99.00th=[ 73], 99.50th=[ 81], 99.90th=[ 120], 99.95th=[ 140],

| 99.99th=[ 140]

bw ( KiB/s): min= 871, max= 5619, per=100.00%, avg=3594.69, stdev=2066.03, samples=59

iops : min= 217, max= 1404, avg=898.29, stdev=516.49, samples=59

lat (msec) : 20=86.12%, 50=4.53%, 100=9.05%, 250=0.30%

cpu : usr=0.25%, sys=0.58%, ctx=2221, majf=0, minf=1

IO depths : 1=2.1%, 2=5.7%, 4=18.5%, 8=63.3%, 16=10.4%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=92.8%, 8=1.7%, 16=5.5%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,26864,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16
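The headline numbers in a fio report can be sanity-checked against each other. With a fixed number of IOs in flight, IOPS is roughly queue depth divided by average latency (Little's law), and bandwidth is IOPS times block size. The sketch below plugs in the figures from the run above; the helper names are hypothetical, and it assumes the queue stayed close to its configured depth of 16 for the whole run.

```python
def iops_from_latency(queue_depth, avg_latency_s):
    """Little's law: throughput = items in flight / time each item spends in flight."""
    return queue_depth / avg_latency_s


def kib_per_s(iops, block_kib=4):
    return iops * block_kib


# Figures taken from the fio report above: iodepth=16, avg lat 17.86 ms, 894 IOPS.
estimated_iops = iops_from_latency(queue_depth=16, avg_latency_s=17.86e-3)
print(f"estimated IOPS from latency: {estimated_iops:.0f} (fio reported 894)")
print(f"bandwidth at 894 IOPS: {kib_per_s(894)} KiB/s (fio reported 3578 KiB/s)")
```

The same relation explains why a lower-latency log device helps so much: at a fixed queue depth, halving the average latency roughly doubles the IOPS.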

With sync=disabled

This effectively tests how fast the pool can write without consideration for sync writes. Writes are buffered in memory as part of the open transaction group (txg) and flushed to disk every 5 seconds by default. The ZIL (ZFS intent log) is not involved when sync is disabled.

IOPS = 48.3k, median latency = 400 microseconds, avg latency = 321 microseconds. Note that the max IOPS recorded was 288.9k, which was likely for the first few seconds as the in-memory buffer filled up. As the buffered writes started to flush to disk, the writes slowed down to keep pace with the slowness of the disks. That peak is effectively the most this system can generate, regardless of what disks are used.

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=137MiB/s][w=35.0k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5388: Mon Jan 30 11:57:29 2023

write: IOPS=48.3k, BW=189MiB/s (198MB/s)(5658MiB/30001msec); 0 zone resets

slat (nsec): min=981, max=176175, avg=2249.36, stdev=1835.18

clat (usec): min=10, max=1486, avg=319.71, stdev=267.96

lat (usec): min=12, max=1490, avg=321.96, stdev=267.89

clat percentiles (usec):

| 1.00th=[ 21], 5.00th=[ 25], 10.00th=[ 29], 20.00th=[ 37],

| 30.00th=[ 52], 40.00th=[ 68], 50.00th=[ 400], 60.00th=[ 461],

| 70.00th=[ 498], 80.00th=[ 578], 90.00th=[ 644], 95.00th=[ 693],

| 99.00th=[ 1004], 99.50th=[ 1090], 99.90th=[ 1172], 99.95th=[ 1205],

| 99.99th=[ 1270]

bw ( KiB/s): min=61860, max=1155592, per=100.00%, avg=194107.64, stdev=242822.10, samples=59

iops : min=15465, max=288898, avg=48526.51, stdev=60705.52, samples=59

lat (usec) : 20=0.59%, 50=29.12%, 100=12.99%, 250=2.63%, 500=25.14%

lat (usec) : 750=26.72%, 1000=1.74%

lat (msec) : 2=1.05%

cpu : usr=12.47%, sys=24.78%, ctx=671732, majf=0, minf=1

IO depths : 1=0.1%, 2=0.5%, 4=12.1%, 8=56.8%, 16=30.6%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=96.0%, 8=1.4%, 16=2.6%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,1448382,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

With Samsung Evo 850 500GB

This was a very popular SSD a few years ago, with great performance per dollar when it was released. This was the 2nd SSD I ever bought (first was a Crucial M4 256GB).

IOPS = 6306, median latency = 1.9 milliseconds, avg latency = 2.5 ms, standard deviation = 1.7 ms. These are not great performance numbers. Consumer drives typically don’t deal well with high queue depth operations.

root@truenas-datto[~]# smartctl -a /dev/da0

Model Family: Samsung based SSDs

Device Model: Samsung SSD 850 EVO 500GB

root@truenas-datto[~]# zpool add bench-pool log /dev/da0

root@truenas-datto[~]# zpool status bench-pool

pool: bench-pool

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

bench-pool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

da1 ONLINE 0 0 0

da3 ONLINE 0 0 0

logs

da0 ONLINE 0 0 0

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=9141KiB/s][w=2285 IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=4532: Mon Jan 30 11:21:33 2023

write: IOPS=6306, BW=24.6MiB/s (25.8MB/s)(739MiB/30005msec); 0 zone resets

slat (nsec): min=1088, max=250418, avg=4202.64, stdev=3509.42

clat (usec): min=1038, max=14358, avg=2509.48, stdev=1683.17

lat (usec): min=1044, max=14362, avg=2513.68, stdev=1683.00

clat percentiles (usec):

| 1.00th=[ 1418], 5.00th=[ 1713], 10.00th=[ 1762], 20.00th=[ 1811],

| 30.00th=[ 1860], 40.00th=[ 1893], 50.00th=[ 1926], 60.00th=[ 1975],

| 70.00th=[ 2008], 80.00th=[ 2073], 90.00th=[ 6259], 95.00th=[ 6849],

| 99.00th=[ 8848], 99.50th=[ 9634], 99.90th=[12518], 99.95th=[12780],

| 99.99th=[13173]

bw ( KiB/s): min= 8928, max=32191, per=100.00%, avg=25461.85, stdev=8650.57, samples=59

iops : min= 2232, max= 8047, avg=6365.05, stdev=2162.61, samples=59

lat (msec) : 2=67.18%, 4=20.48%, 10=12.06%, 20=0.28%

cpu : usr=2.26%, sys=5.10%, ctx=35707, majf=0, minf=1

IO depths : 1=0.1%, 2=0.3%, 4=6.6%, 8=75.7%, 16=17.4%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=91.3%, 8=4.8%, 16=3.9%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,189227,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

With a 10 year old Intel DC S3500 120GB

This was the first enterprise SSD I acquired for use in my homelab. I bought two, for a read/write cache on my Xpenology machine (DSM requires 2 drives to mirror for write cache). The datasheet indicates write latency of 65 us, which is pretty quick. Much faster than the 1900 us median latency of the Evo 850 in the previous section. The downside of the 120GB model is that sequential writes are rated at only 135 MB/s, though that is fine for gigabit filesharing. These drives (and many, many other “enterprise” SSDs) feature capacitors for power loss protection. The drive can acknowledge writes very quickly because the capacitors store enough energy for the drive to commit them to NAND in the event of a power loss.

IOPS = 32.5k, about 5x the Evo 850. Median latency = 445 us. Standard deviation = 0.14 ms, more than 10x more consistent than the Evo 850.

root@truenas-datto[~]# smartctl -a /dev/da0

Model Family: Intel 730 and DC S35x0/3610/3700 Series SSDs

Device Model: INTEL SSDSC2BB120G4

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=128MiB/s][w=32.9k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=4782: Mon Jan 30 11:26:32 2023

write: IOPS=32.5k, BW=127MiB/s (133MB/s)(3811MiB/30001msec); 0 zone resets

slat (nsec): min=1226, max=12095k, avg=3314.28, stdev=13914.23

clat (usec): min=156, max=17646, avg=468.20, stdev=137.34

lat (usec): min=214, max=17648, avg=471.52, stdev=138.30

clat percentiles (usec):

| 1.00th=[ 363], 5.00th=[ 388], 10.00th=[ 400], 20.00th=[ 416],

| 30.00th=[ 429], 40.00th=[ 437], 50.00th=[ 445], 60.00th=[ 457],

| 70.00th=[ 478], 80.00th=[ 506], 90.00th=[ 570], 95.00th=[ 611],

| 99.00th=[ 725], 99.50th=[ 807], 99.90th=[ 1057], 99.95th=[ 1254],

| 99.99th=[ 3032]

bw ( KiB/s): min=109752, max=133120, per=100.00%, avg=130157.39, stdev=4451.81, samples=59

iops : min=27438, max=33280, avg=32539.07, stdev=1112.98, samples=59

lat (usec) : 250=0.01%, 500=78.78%, 750=20.44%, 1000=0.64%

lat (msec) : 2=0.11%, 4=0.02%, 20=0.01%

cpu : usr=6.55%, sys=13.26%, ctx=186714, majf=0, minf=1

IO depths : 1=0.3%, 2=4.6%, 4=23.3%, 8=59.6%, 16=12.3%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=94.1%, 8=0.3%, 16=5.5%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,975522,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

With an 11 year old HGST SSD400S.B 200GB

This is a SAS2 SSD with SLC NAND, and power loss protection. It has a rated endurance of 18 petabytes. This is effectively infinite write endurance for any home use situation (and even many enterprise/commercial use situations).

IOPS = 27.4k, median latency = 486 us, standard dev = 238 us. Very good numbers.

root@truenas-datto[~]# smartctl -a /dev/da0

=== START OF INFORMATION SECTION ===

Vendor: HITACHI

Product: HUSSL402 CLAR200

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=107MiB/s][w=27.5k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5064: Mon Jan 30 11:31:09 2023

write: IOPS=27.4k, BW=107MiB/s (112MB/s)(3208MiB/30001msec); 0 zone resets

slat (nsec): min=1234, max=1589.4k, avg=3528.85, stdev=7290.11

clat (usec): min=159, max=17706, avg=559.45, stdev=238.22

lat (usec): min=207, max=17710, avg=562.98, stdev=238.44

clat percentiles (usec):

| 1.00th=[ 363], 5.00th=[ 396], 10.00th=[ 416], 20.00th=[ 445],

| 30.00th=[ 457], 40.00th=[ 469], 50.00th=[ 486], 60.00th=[ 519],

| 70.00th=[ 578], 80.00th=[ 652], 90.00th=[ 791], 95.00th=[ 930],

| 99.00th=[ 1172], 99.50th=[ 1287], 99.90th=[ 4146], 99.95th=[ 4359],

| 99.99th=[ 4948]

bw ( KiB/s): min=82868, max=135401, per=99.87%, avg=109358.92, stdev=15198.36, samples=59

iops : min=20717, max=33850, avg=27339.37, stdev=3799.66, samples=59

lat (usec) : 250=0.01%, 500=55.35%, 750=32.72%, 1000=8.72%

lat (msec) : 2=3.07%, 4=0.03%, 10=0.12%, 20=0.01%

cpu : usr=6.02%, sys=11.55%, ctx=158396, majf=0, minf=1

IO depths : 1=0.3%, 2=4.5%, 4=23.5%, 8=59.5%, 16=12.3%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=94.2%, 8=0.3%, 16=5.5%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,821298,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

HGST Ultrastar SS300 HUSMM3240 400GB

This is a more modern SAS3 MLC SSD, spec’d for write-intensive use cases. As far as I can tell, this drive is about as close to NVMe performance as you can get from a SAS3 interface per the datasheet specs (200k IOPS, 2050 MB/s throughput, 85 us latency max). Endurance is 10 drive writes per day for 5 years, which is 7.3 PB.
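The endurance arithmetic is simple enough to spell out. The sketch below reproduces the 7.3 PB figure from the drive-writes-per-day rating, then turns a rated endurance into a rough lifetime. The 100 GB/day write rate is a made-up number for illustration, not a measurement, and the helper names are hypothetical.

```python
def endurance_pb(capacity_gb, drive_writes_per_day, years):
    """Total rated writes in petabytes (decimal units, 1 PB = 1,000,000 GB)."""
    total_gb = capacity_gb * drive_writes_per_day * 365 * years
    return total_gb / 1_000_000


def years_until_worn_out(rated_pb, written_gb_per_day):
    """How long a rated endurance lasts at a steady daily write volume."""
    return rated_pb * 1_000_000 / written_gb_per_day / 365


# SS300 400GB: 10 drive writes per day for 5 years.
print(f"{endurance_pb(400, 10, 5):.1f} PB")

# Hypothetical homelab writing 100 GB/day to a drive rated for 18 PB,
# the figure quoted for the SSD400S.B earlier.
print(f"{years_until_worn_out(18, 100):.0f} years")
```

Even with the write rate off by an order of magnitude, either drive would outlast the rest of the server, which is the point behind calling the endurance effectively infinite for home use.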

Our pool now does 43.0k IOPS (max 49.8k), with a median sync latency of 306 us, standard dev of 244 us

root@truenas-datto[~]# smartctl -a /dev/da0

=== START OF INFORMATION SECTION ===

Vendor: HGST

Product: HUSMM3240ASS205

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=107MiB/s][w=27.5k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5609: Mon Jan 30 12:01:13 2023

write: IOPS=43.0k, BW=168MiB/s (176MB/s)(5045MiB/30001msec); 0 zone resets

slat (nsec): min=1190, max=300805, avg=3316.20, stdev=6306.27

clat (usec): min=86, max=33197, avg=350.86, stdev=244.72

lat (usec): min=129, max=33199, avg=354.17, stdev=244.57

clat percentiles (usec):

| 1.00th=[ 212], 5.00th=[ 247], 10.00th=[ 265], 20.00th=[ 281],

| 30.00th=[ 289], 40.00th=[ 297], 50.00th=[ 306], 60.00th=[ 314],

| 70.00th=[ 330], 80.00th=[ 347], 90.00th=[ 379], 95.00th=[ 486],

| 99.00th=[ 1369], 99.50th=[ 1418], 99.90th=[ 1532], 99.95th=[ 1565],

| 99.99th=[ 1631]

bw ( KiB/s): min=106970, max=199121, per=100.00%, avg=173154.63, stdev=33688.29, samples=59

iops : min=26742, max=49780, avg=43288.37, stdev=8422.14, samples=59

lat (usec) : 100=0.01%, 250=5.86%, 500=89.35%, 750=0.62%, 1000=0.05%

lat (msec) : 2=4.11%, 4=0.01%, 10=0.01%, 20=0.01%, 50=0.01%

cpu : usr=7.38%, sys=17.49%, ctx=318098, majf=0, minf=1

IO depths : 1=0.3%, 2=3.9%, 4=21.5%, 8=61.2%, 16=13.1%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=93.9%, 8=0.8%, 16=5.3%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,1291513,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

Intel Optane DC P1600X 58GB

This is one of the drives sent to me by Intel. Optane is not NAND technology. I don’t really keep up on the details of persistent storage, so you’ll have to look up specifics. What I do know is that the technology allows for much faster writes, in terms of latency. At the top end, the throughput isn’t as high as modern NVMe, but the latency is much quicker at low queue depths. As a reminder, all the tests so far are with queue depth = 16. At these queue depths, Optane doesn’t look as fast as it really is, unless you’re working with the P1600X’s big brother (next section).

IOPS = 39.2k (max 57.6k), median latency = 322 us, std dev = 385 us.

root@truenas-datto[~]# smartctl -a /dev/nvme1 | head -n 6

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p2 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Number: INTEL SSDPEK1A058GA

Serial Number: PHOC209200KC058A

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=98.7MiB/s][w=25.3k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5670: Mon Jan 30 12:03:22 2023

write: IOPS=39.2k, BW=153MiB/s (160MB/s)(4591MiB/30001msec); 0 zone resets

slat (nsec): min=1096, max=337336, avg=3261.19, stdev=6144.90

clat (usec): min=26, max=72627, avg=387.96, stdev=385.67

lat (usec): min=75, max=72631, avg=391.22, stdev=385.50

clat percentiles (usec):

| 1.00th=[ 163], 5.00th=[ 200], 10.00th=[ 221], 20.00th=[ 265],

| 30.00th=[ 289], 40.00th=[ 306], 50.00th=[ 322], 60.00th=[ 334],

| 70.00th=[ 351], 80.00th=[ 408], 90.00th=[ 619], 95.00th=[ 857],

| 99.00th=[ 1385], 99.50th=[ 1500], 99.90th=[ 2245], 99.95th=[ 2343],

| 99.99th=[ 2671]

bw ( KiB/s): min=51568, max=230682, per=100.00%, avg=157659.42, stdev=51886.29, samples=59

iops : min=12892, max=57670, avg=39414.47, stdev=12971.59, samples=59

lat (usec) : 50=0.01%, 100=0.04%, 250=16.53%, 500=67.34%, 750=10.13%

lat (usec) : 1000=1.55%

lat (msec) : 2=4.31%, 4=0.11%, 10=0.01%, 50=0.01%, 100=0.01%

cpu : usr=7.55%, sys=17.05%, ctx=310458, majf=0, minf=1

IO depths : 1=0.2%, 2=3.3%, 4=18.4%, 8=63.4%, 16=14.8%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=93.6%, 8=1.5%, 16=4.8%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,1175375,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

Intel Optane DC P4800X 375GB

This is the other drive that Intel sent me. This drive is fast. Many review sites use verbiage such as “this drive has no comparison” or “this is the fastest drive we’ve ever tested”.

My first test was for 30 seconds. I realized the drive was doing its thing (caching sync writes) much faster than the HDD mirror could flush to disk. Regardless, the initial results are below. Note the max IOPS of 84.6k.

root@truenas-datto[~]# smartctl -a /dev/nvme0

=== START OF INFORMATION SECTION ===

Model Number: INTEL SSDPED1K375GA

Serial Number: PHKS750500G2375AGN

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=30 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=105MiB/s][w=26.9k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5733: Mon Jan 30 12:05:04 2023

write: IOPS=38.9k, BW=152MiB/s (159MB/s)(4560MiB/30001msec); 0 zone resets

slat (nsec): min=1138, max=14924k, avg=4410.98, stdev=17210.79

clat (usec): min=15, max=38034, avg=389.12, stdev=465.14

lat (usec): min=52, max=38036, avg=393.54, stdev=464.47

clat percentiles (usec):

| 1.00th=[ 81], 5.00th=[ 110], 10.00th=[ 129], 20.00th=[ 149],

| 30.00th=[ 161], 40.00th=[ 172], 50.00th=[ 180], 60.00th=[ 190],

| 70.00th=[ 210], 80.00th=[ 465], 90.00th=[ 1221], 95.00th=[ 1287],

| 99.00th=[ 1467], 99.50th=[ 1549], 99.90th=[ 2343], 99.95th=[ 2409],

| 99.99th=[ 2737]

bw ( KiB/s): min=61716, max=338557, per=100.00%, avg=156442.86, stdev=111950.13, samples=59

iops : min=15429, max=84639, avg=39110.37, stdev=27987.58, samples=59

lat (usec) : 20=0.01%, 50=0.02%, 100=3.42%, 250=72.03%, 500=4.63%

lat (usec) : 750=0.26%, 1000=0.10%

lat (msec) : 2=19.20%, 4=0.35%, 10=0.01%, 20=0.01%, 50=0.01%

cpu : usr=8.83%, sys=17.06%, ctx=377621, majf=0, minf=1

IO depths : 1=0.2%, 2=2.4%, 4=15.2%, 8=66.6%, 16=15.7%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=93.0%, 8=2.6%, 16=4.4%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,1167247,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

Now that we’re hitting the HDD mirror limits

Recall that the default txg flush interval is 5 seconds. I used a test of 10 seconds, thinking that the SLOG will cache writes for up to 5 seconds, and for the next 5 seconds, the disks are constantly writing the cache to disk while the SLOG is still ingesting writes.
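To get a feel for how much data is in play, it helps to estimate how much the log device takes in during one flush interval, since that is roughly what the HDD mirror then has to write out. The sketch below is back-of-the-envelope only: it assumes a steady ingest rate and a fixed 5 second interval, and it ignores anything else that may close a transaction group early. The function name is hypothetical, and the rates are the 30 second results from the earlier sections.

```python
def mib_per_txg(throughput_mib_s, txg_interval_s=5.0):
    """Data accepted during one flush interval at a steady ingest rate."""
    return throughput_mib_s * txg_interval_s


# Average bandwidth from the 30 second runs above.
for name, rate in [("HGST SS300", 168), ("Intel P1600X", 153), ("Intel P4800X", 152)]:
    print(f"{name}: about {mib_per_txg(rate):.0f} MiB per 5 second interval")
```

Seen this way, a shorter test mostly measures the log device, while a longer one increasingly measures how fast the mirror can absorb each batch.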

HGST SS300 – 10 second test

IOPS = 47.7k (max = 49.0k), median latency = 306 us, std dev = 125 us

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=10 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=172MiB/s][w=44.0k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5798: Mon Jan 30 12:06:40 2023

write: IOPS=47.7k, BW=186MiB/s (195MB/s)(1864MiB/10001msec); 0 zone resets

slat (nsec): min=1069, max=2552.4k, avg=3405.36, stdev=7482.06

clat (usec): min=85, max=18461, avg=314.21, stdev=124.91

lat (usec): min=156, max=18465, avg=317.61, stdev=125.01

clat percentiles (usec):

| 1.00th=[ 225], 5.00th=[ 253], 10.00th=[ 273], 20.00th=[ 285],

| 30.00th=[ 289], 40.00th=[ 297], 50.00th=[ 306], 60.00th=[ 314],

| 70.00th=[ 326], 80.00th=[ 343], 90.00th=[ 363], 95.00th=[ 392],

| 99.00th=[ 486], 99.50th=[ 510], 99.90th=[ 586], 99.95th=[ 635],

| 99.99th=[ 1860]

bw ( KiB/s): min=167409, max=196215, per=100.00%, avg=191025.95, stdev=6820.67, samples=19

iops : min=41852, max=49053, avg=47756.05, stdev=1705.08, samples=19

lat (usec) : 100=0.01%, 250=4.23%, 500=95.12%, 750=0.62%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.01%

cpu : usr=7.83%, sys=19.74%, ctx=125318, majf=0, minf=1

IO depths : 1=0.2%, 2=4.0%, 4=22.3%, 8=60.6%, 16=12.9%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=94.1%, 8=0.5%, 16=5.4%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,477196,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

Intel P1600X – 10 second test

IOPS = 49.0k (max = 56.0k), median latency = 306 us, std dev = 151 us

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=10 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=192MiB/s][w=49.1k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5846: Mon Jan 30 12:07:30 2023

write: IOPS=49.0k, BW=191MiB/s (201MB/s)(1913MiB/10001msec); 0 zone resets

slat (nsec): min=1149, max=2444.8k, avg=3780.45, stdev=8423.91

clat (usec): min=17, max=19475, avg=304.18, stdev=151.42

lat (usec): min=92, max=19478, avg=307.96, stdev=151.36

clat percentiles (usec):

| 1.00th=[ 159], 5.00th=[ 196], 10.00th=[ 210], 20.00th=[ 247],

| 30.00th=[ 277], 40.00th=[ 293], 50.00th=[ 306], 60.00th=[ 318],

| 70.00th=[ 330], 80.00th=[ 343], 90.00th=[ 375], 95.00th=[ 420],

| 99.00th=[ 519], 99.50th=[ 537], 99.90th=[ 594], 99.95th=[ 635],

| 99.99th=[ 1205]

bw ( KiB/s): min=181572, max=224247, per=100.00%, avg=196069.00, stdev=9980.14, samples=19

iops : min=45393, max=56061, avg=49016.74, stdev=2494.90, samples=19

lat (usec) : 20=0.01%, 50=0.01%, 100=0.05%, 250=20.89%, 500=77.45%

lat (usec) : 750=1.59%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 20=0.01%

cpu : usr=9.61%, sys=21.87%, ctx=146140, majf=0, minf=1

IO depths : 1=0.2%, 2=3.5%, 4=18.5%, 8=63.4%, 16=14.4%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=93.6%, 8=1.6%, 16=4.8%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,489805,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

Intel P4800X – 8 second test

IOPS = 80.7k (max = 86.3k, so might still be hitting HDD mirror limits), median latency = 172 us, std dev = 86 us. These numbers are fantastic, especially for 4k blocksize. The throughput is 331MB/s. You will have a hard time getting your hands on a faster device unless you have $xx,xxx to spend.

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=16 --runtime=8 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=16

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=277MiB/s][w=70.9k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5906: Mon Jan 30 12:09:02 2023

write: IOPS=80.7k, BW=315MiB/s (331MB/s)(2522MiB/8001msec); 0 zone resets

slat (nsec): min=1264, max=13894k, avg=4853.54, stdev=20889.14

clat (usec): min=14, max=14173, avg=174.95, stdev=84.46

lat (usec): min=55, max=14176, avg=179.81, stdev=86.27

clat percentiles (usec):

| 1.00th=[ 84], 5.00th=[ 116], 10.00th=[ 135], 20.00th=[ 149],

| 30.00th=[ 159], 40.00th=[ 165], 50.00th=[ 172], 60.00th=[ 178],

| 70.00th=[ 184], 80.00th=[ 192], 90.00th=[ 210], 95.00th=[ 233],

| 99.00th=[ 367], 99.50th=[ 441], 99.90th=[ 644], 99.95th=[ 734],

| 99.99th=[ 1037]

bw ( KiB/s): min=275345, max=345029, per=100.00%, avg=328385.13, stdev=16226.53, samples=15

iops : min=68836, max=86259, avg=82095.93, stdev=4056.60, samples=15

lat (usec) : 20=0.01%, 50=0.02%, 100=2.65%, 250=93.56%, 500=3.47%

lat (usec) : 750=0.25%, 1000=0.03%

lat (msec) : 2=0.01%, 10=0.01%, 20=0.01%

cpu : usr=15.90%, sys=33.75%, ctx=232026, majf=0, minf=1

IO depths : 1=0.2%, 2=2.9%, 4=18.1%, 8=64.7%, 16=14.1%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=93.3%, 8=1.8%, 16=4.9%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,645590,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16
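As a quick sanity check on the throughput figure for the P4800X run above, bandwidth is just IOPS multiplied by block size. Here is a minimal sketch in shell; the helper name `iops_to_bw` is made up for illustration and is not part of fio:

```shell
# Convert an IOPS figure and a block size (bytes) into MiB/s and MB/s.
# iops_to_bw is a hypothetical helper name used only for this illustration.
iops_to_bw() {
    awk -v iops="$1" -v bs="$2" 'BEGIN {
        bytes = iops * bs
        printf("%.0f MiB/s, %.0f MB/s\n", bytes / (1024 * 1024), bytes / 1000000)
    }'
}

# P4800X at QD=16: 80.7k IOPS with 4 KiB blocks
iops_to_bw 80700 4096
```

Fed the rounded 80.7k IOPS, this lands on the same 315 MiB/s (331 MB/s) that fio printed, and the same arithmetic works for any of the other runs.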

Queue Depth = 1 tests

Where the Optane drives really shine is with low queue depth operations. I reran the tests with QD=1 (5 second test to simulate a burst of writes) for the P4800X, the P1600X, and the SS300.

P4800X queue depth = 1

IOPS = 20.2k, median latency = 45 us, std dev = 129 us.

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=1 --runtime=5 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=1

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=73.7MiB/s][w=18.9k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5920: Mon Jan 30 12:09:42 2023

write: IOPS=20.2k, BW=78.8MiB/s (82.6MB/s)(394MiB/5001msec); 0 zone resets

slat (nsec): min=1413, max=266338, avg=1671.21, stdev=1557.28

clat (usec): min=16, max=40903, avg=47.37, stdev=128.99

lat (usec): min=43, max=40905, avg=49.04, stdev=129.01

clat percentiles (usec):

| 1.00th=[ 43], 5.00th=[ 44], 10.00th=[ 44], 20.00th=[ 44],

| 30.00th=[ 44], 40.00th=[ 44], 50.00th=[ 45], 60.00th=[ 45],

| 70.00th=[ 46], 80.00th=[ 47], 90.00th=[ 50], 95.00th=[ 68],

| 99.00th=[ 85], 99.50th=[ 92], 99.90th=[ 115], 99.95th=[ 133],

| 99.99th=[ 245]

bw ( KiB/s): min=68448, max=85351, per=100.00%, avg=80968.44, stdev=6282.37, samples=9

iops : min=17112, max=21337, avg=20241.78, stdev=1570.39, samples=9

lat (usec) : 20=0.01%, 50=89.91%, 100=9.81%, 250=0.27%, 500=0.01%

lat (msec) : 50=0.01%

cpu : usr=4.02%, sys=7.56%, ctx=101209, majf=0, minf=1

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,100846,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

P1600X queue depth = 1

IOPS = 13.7k, median latency = 63 us, std dev = 134 us

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=1 --runtime=5 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=1

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=55.0MiB/s][w=14.1k IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=5969: Mon Jan 30 12:10:43 2023

write: IOPS=13.7k, BW=53.5MiB/s (56.1MB/s)(268MiB/5001msec); 0 zone resets

slat (nsec): min=1344, max=82121, avg=1741.86, stdev=1171.68

clat (usec): min=45, max=34906, avg=70.64, stdev=134.05

lat (usec): min=61, max=34908, avg=72.38, stdev=134.11

clat percentiles (usec):

| 1.00th=[ 62], 5.00th=[ 62], 10.00th=[ 62], 20.00th=[ 63],

| 30.00th=[ 63], 40.00th=[ 63], 50.00th=[ 63], 60.00th=[ 64],

| 70.00th=[ 64], 80.00th=[ 71], 90.00th=[ 95], 95.00th=[ 117],

| 99.00th=[ 123], 99.50th=[ 125], 99.90th=[ 135], 99.95th=[ 147],

| 99.99th=[ 176]

bw ( KiB/s): min=55067, max=56348, per=100.00%, avg=55753.33, stdev=498.73, samples=9

iops : min=13766, max=14087, avg=13937.89, stdev=124.76, samples=9

lat (usec) : 50=0.01%, 100=92.75%, 250=7.25%

lat (msec) : 50=0.01%

cpu : usr=2.88%, sys=5.60%, ctx=68708, majf=0, minf=1

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,68540,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

SS300 queue depth = 1

Back to reality with a very high performing SAS-3 SSD.

IOPS = 6.4k, median latency = 151 us, std dev = 93 us

The P4800X is roughly 3x faster than this very, very fast SSD, and the P1600X is roughly 2x faster.

root@truenas-datto[/bench-pool]# rm -f random-write.0.0 && fio --name=random-write --ioengine=posixaio --rw=randwrite --bs=4k --numjobs=1 --size=4g --iodepth=1 --runtime=5 --time_based

random-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=posixaio, iodepth=1

fio-3.33

Starting 1 process

random-write: Laying out IO file (1 file / 4096MiB)

Jobs: 1 (f=1): [w(1)][100.0%][w=24.9MiB/s][w=6363 IOPS][eta 00m:00s]

random-write: (groupid=0, jobs=1): err= 0: pid=6023: Mon Jan 30 12:11:23 2023

write: IOPS=6379, BW=24.9MiB/s (26.1MB/s)(125MiB/5001msec); 0 zone resets

slat (nsec): min=1350, max=49617, avg=2134.61, stdev=1266.22

clat (usec): min=125, max=16182, avg=153.86, stdev=93.01

lat (usec): min=129, max=16183, avg=155.99, stdev=93.23

clat percentiles (usec):

| 1.00th=[ 131], 5.00th=[ 133], 10.00th=[ 133], 20.00th=[ 133],

| 30.00th=[ 135], 40.00th=[ 135], 50.00th=[ 151], 60.00th=[ 155],

| 70.00th=[ 159], 80.00th=[ 169], 90.00th=[ 200], 95.00th=[ 202],

| 99.00th=[ 206], 99.50th=[ 208], 99.90th=[ 235], 99.95th=[ 255],

| 99.99th=[ 537]

bw ( KiB/s): min=25434, max=25887, per=100.00%, avg=25632.00, stdev=152.43, samples=9

iops : min= 6358, max= 6471, avg=6407.56, stdev=38.13, samples=9

lat (usec) : 250=99.94%, 500=0.05%, 750=0.01%, 1000=0.01%

lat (msec) : 20=0.01%

cpu : usr=1.72%, sys=3.26%, ctx=31941, majf=0, minf=1

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,31903,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1
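The QD=1 numbers above follow a simple relationship: with only one I/O in flight at a time, IOPS is roughly the inverse of the average latency. A small sketch of that arithmetic follows, using the average `lat` values from the three fio runs; the helper name `qd1_iops` is hypothetical:

```shell
# At queue depth 1 only one I/O is in flight, so IOPS is roughly 1 / average latency.
# qd1_iops is a hypothetical helper; the argument is average total latency in microseconds.
qd1_iops() {
    awk -v lat_us="$1" 'BEGIN { printf("%.0f\n", 1000000 / lat_us) }'
}

qd1_iops 49.04    # P4800X: fio measured 20.2k IOPS
qd1_iops 72.38    # P1600X: fio measured 13.7k IOPS
qd1_iops 155.99   # SS300:  fio measured 6379 IOPS
```

The estimates land within about a percent of what fio measured, which is why latency is the number to watch for low queue depth workloads.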

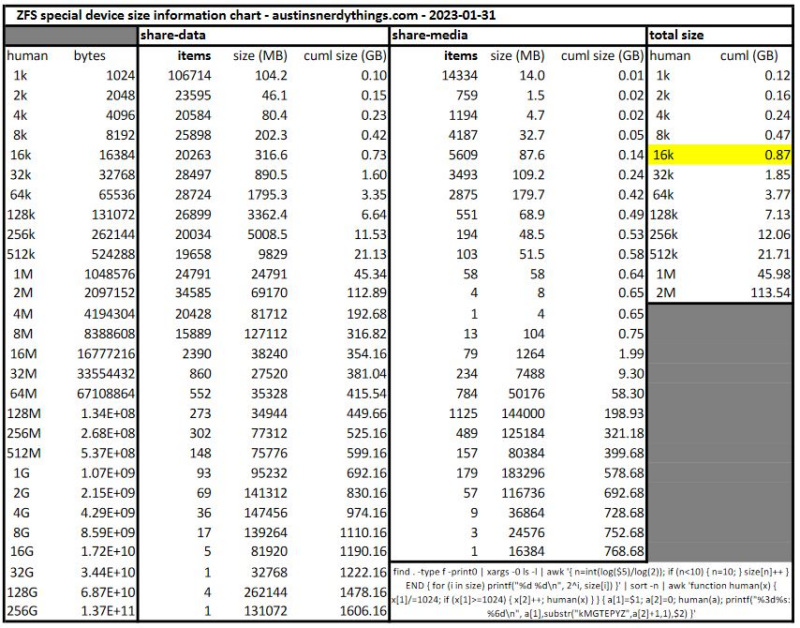

Last test, using a P1600X as a ZFS special allocation device (special vdev)

The Intel engineer I was working with requested I demonstrate the potential of a P1600X as a ZFS special allocation device (special device/special vdev). They did not have a specific test in mind. A special device stores metadata about the files on the pool, and can additionally store small blocks (as set with the special_small_blocks property of the dataset). If you set special_small_blocks to 16k, for example, any block of 16k or smaller (in practice, any file small enough to fit in a single block of that size) will be written to the special device directly instead of the main pool disks. As you might imagine, this can really speed up transfers of small files. Note: since the special device stores all metadata about data on the pool, and potentially data itself, it is critical that it is at least a mirror (some use 3-way mirrors). If you lose the special vdev, you lose the entire pool! Since I am just benchmarking, I do not have a mirrored special vdev. I can remove it whenever I want since the underlying pool is made up of mirrors.
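For a pool that holds data you care about, the setup described above might look something like the following. This is only a sketch: the pool, dataset, and device names are placeholders, not the ones used in this benchmark.

```shell
# Hypothetical pool, dataset, and device names; substitute your own.
POOL=tank
DATASET=tank/documents

# Add the special vdev as a mirror so a single device failure does not take the pool with it.
zpool add "$POOL" special mirror /dev/nvd2 /dev/nvd3

# Send blocks of 16K or smaller in this dataset to the special vdev.
zfs set special_small_blocks=16K "$DATASET"

# Confirm the property took effect.
zfs get special_small_blocks "$DATASET"
```

Keep in mind that the property only affects blocks written after it is set.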

The test procedure is as follows:

- Reboot machine to clear ARC

- Run a command that reads metadata for every file on a dataset. The specific command prints out a distribution of file sizes, which I then used to calculate what I should use for special_small_blocks.

- Note the time it took to run said command

- Wipe pool

- Add special device to pool

- Transfer all data back to pool

- Rerun command and note time difference.

The “wipe pool” and “transfer all data back to pool” steps make this test something you don’t want to repeat. As with many other ZFS things, such as changing compression type or recordsize, special device metadata is only written upon writes; it will not backfill existing data.

Command used for special_small_blocks calculation

I found this on level1techs.com:

find . -type f -print0 | xargs -0 ls -l | awk '{ n=int(log($5)/log(2)); if (n<10) { n=10; } size[n]++ } END { for (i in size) printf("%d %d\n", 2^i, size[i]) }' | sort -n | awk 'function human(x) { x[1]/=1024; if (x[1]>=1024) { x[2]++; human(x) } } { a[1]=$1; a[2]=0; human(a); printf("%3d%s: %6d\n", a[1],substr("kMGTEPYZ",a[2]+1,1),$2) }'

It prints out a list of size bins and how many files fall into each bin. I threw ‘time’ in front to see how long it takes to run. I ran it once for each dataset. This is my media dataset (movies, music, tv shows, etc.)

root@truenas-datto[/mnt/test-pool/share-media]# time find . -type f -print0 | xargs -0 ls -l | awk '{ n=int(log($5)/log(2)); if (n<10) { n=10; } size[n]++ } END { for (i in size) printf("%d %d\n", 2^i, size[i]) }' | sort -n | awk 'function human(x) { x[1]/=1024; if (x[1]>=1024) { x[2]++; human(x) } } { a[1]=$1; a[2]=0; human(a); printf("%3d%s: %6d\n", a[1],substr("kMGTEPYZ",a[2]+1,1),$2) }'

1k: 14336

2k: 759

4k: 1194

8k: 4188

16k: 5609

32k: 3498

64k: 2882

128k: 552

256k: 194

512k: 103

1M: 58

2M: 6

4M: 2

8M: 14

16M: 98

32M: 253

64M: 793

128M: 1130

256M: 529

512M: 322

1G: 185

2G: 60

4G: 9

8G: 3

16G: 1

find . -type f -print0 0.15s user 2.10s system 6% cpu 37.210 total

xargs -0 ls -l 1.23s user 0.24s system 3% cpu 37.213 total

awk 0.07s user 0.02s system 0% cpu 37.213 total

sort -n 0.00s user 0.00s system 0% cpu 37.213 total

awk 0.00s user 0.00s system 0% cpu 37.212 total

37.2 seconds total to run.

And on my ‘data’ dataset (backups, general file storage, etc.):

root@truenas-datto[/mnt/test-pool/share-data]# time find . -type f -print0 | xargs -0 ls -l | awk '{ n=int(log($5)/log(2)); if (n<10) { n=10; } size[n]++ } END { for (i in size) printf("%d %d\n", 2^i, size[i]) }' | sort -n | awk 'function human(x) { x[1]/=1024; if (x[1]>=1024) { x[2]++; human(x) } } { a[1]=$1; a[2]=0; human(a); printf("%3d%s: %6d\n", a[1],substr("kMGTEPYZ",a[2]+1,1),$2) }'

1k: 106714

2k: 23595

4k: 20593

8k: 25994

16k: 20264

32k: 28506

64k: 28759

128k: 26909

256k: 20027

512k: 19708

1M: 24780

2M: 34587

4M: 20427

8M: 15888

16M: 2388

32M: 983

64M: 558

128M: 272

256M: 260

512M: 179

1G: 93

2G: 69

4G: 36

8G: 17

16G: 5

32G: 1

128G: 4

256G: 1

find . -type f -print0 0.36s user 5.46s system 3% cpu 2:43.07 total

xargs -0 ls -l 10.46s user 2.55s system 7% cpu 2:43.13 total

awk 0.78s user 0.02s system 0% cpu 2:43.13 total

sort -n 0.00s user 0.00s system 0% cpu 2:43.13 total

awk 0.00s user 0.00s system 0% cpu 2:43.13 total

2m43s. We have a baseline.
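The one-liner used above is dense, so here is a more readable sketch of the same binning idea as a shell function. It is a variation, not the exact command from level1techs: it doubles the bin until the file size fits instead of using log(), it prints raw byte values rather than human-readable labels, and the name `size_histogram` is made up for illustration.

```shell
# Print "bin_start_bytes count" for each power-of-two size bin under a directory.
# Files smaller than 1 KiB are folded into the 1 KiB bin, as in the one-liner.
# size_histogram is a hypothetical name used only for this sketch.
size_histogram() {
    find "$1" -type f -exec ls -ln {} + |
    awk '{
        size = $5                          # 5th column of ls -l is the size in bytes
        n = 10                             # start at 2^10 = 1 KiB
        while (2 ^ (n + 1) <= size) n++    # grow until size is in [2^n, 2^(n+1))
        count[n]++
    }
    END { for (n in count) printf("%.0f %d\n", 2 ^ n, count[n]) }' |
    sort -n
}

# Usage (path is an example): size_histogram /mnt/test-pool/share-media
```

Like the original, this has to stat every file, so it exercises the same metadata reads that the timing test is measuring.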

I also threw together a spreadsheet to do a cumulative sum on how much space is used by each bin. I decided to go with 16k, which should only use 0.87 GB of the 58 GB Optane P1600X for small files.
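The cumulative sum can also be done without a spreadsheet. This is a rough sketch, and it rests on two assumptions: each bin is estimated as count × bin label (which undercounts, since a file in a bin can be up to twice the label), and the counts from both datasets are added together. The function name `cumulative_gib` is hypothetical.

```shell
# Read "bin_bytes count" lines (ascending) and print the running total in GiB.
# Each bin is estimated as count * bin_bytes, which is a rough lower bound.
cumulative_gib() {
    awk '{ total += $1 * $2; printf("%.0f %.2f\n", $1, total / (1024 ^ 3)) }'
}

# Combined media + data file counts for the bins up to 16k, from the two runs above.
printf '%s\n' \
    "1024 121050" \
    "2048 24354" \
    "4096 21787" \
    "8192 30182" \
    "16384 25873" | cumulative_gib
```

Summing through the 16k row gives roughly 0.87 GiB, in line with the estimate above.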

Rebuilding the pool with a special allocation device

I use syncoid exclusively to synchronize ZFS pools across machines/systems. Sanoid generates the snapshots. These are great tools written by Jim Salter. I transferred with option special_small_blocks=16k so the property was set immediately. Syncoid won’t write to an empty, already created dataset. It took a while to transfer 3.7TB, even over a 10G link.

-- add 2x more WD Reds, wipe pools, recreate pools, remove p1600x as slog, re-add as special dev

root@truenas-datto[~]# zpool destroy bench-pool

zpool create -o ashift=12 test-pool mirror /dev/da0 /dev/da1 mirror /dev/da2 /dev/da3

zpool add -f test-pool special /dev/nvd1

root@truenas-datto[~]# smartctl -a /dev/nvme1

=== START OF INFORMATION SECTION ===

Model Number: INTEL SSDPEK1A058GA

Serial Number: PHOC209200KC058A

root@truenas-datto[~]# zpool status test-pool

pool: test-pool

state: ONLINE

config:

NAME STATE READ WRITE CKSUM

test-pool ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

da0 ONLINE 0 0 0

da1 ONLINE 0 0 0

mirror-1 ONLINE 0 0 0

da2 ONLINE 0 0 0

da3 ONLINE 0 0 0

special

nvd1 ONLINE 0 0 0

errors: No known data errors

syncoid --no-sync-snap --recvoptions="o special_small_blocks=16k" [email protected]:big/share-media test-pool/share-media

root@truenas-datto[~]# zfs get special_small_blocks test-pool/share-media

NAME PROPERTY VALUE SOURCE

test-pool/share-media special_small_blocks 16K local

-----------------------------------------------------------------------------------------------------------------------------

-- NOTE: this zpool list was captured approximately 1 minute into the data transfer, hence why the nvd1 ALLOC is only 24.7M -

-----------------------------------------------------------------------------------------------------------------------------

root@truenas-datto[~]# zpool list -v test-pool

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

test-pool 7.30T 15.1G 7.29T - - 0% 0% 1.00x ONLINE /mnt

mirror-0 3.62T 7.22G 3.62T - - 0% 0.19% - ONLINE

da0 3.64T - - - - - - - ONLINE

da1 3.64T - - - - - - - ONLINE

mirror-1 3.62T 7.87G 3.62T - - 0% 0.21% - ONLINE

da2 3.64T - - - - - - - ONLINE

da3 3.64T - - - - - - - ONLINE

special - - - - - - - - -

nvd1 54.9G 24.7M 54.5G - - 0% 0.04% - ONLINE

Special device metadata reading results

The same command as before for the ‘media’ dataset:

root@truenas-datto[/mnt/test-pool/share-media]# time find . -type f -print0 | xargs -0 ls -l | awk '{ n=int(log($5)/log(2)); if (n<10) { n=10; } size[n]++ } END { for (i in size) printf("%d %d\n", 2^i, size[i]) }' | sort -n | awk 'function human(x) { x[1]/=1024; if (x[1]>=1024) { x[2]++; human(x) } } { a[1]=$1; a[2]=0; human(a); printf("%3d%s: %6d\n", a[1],substr("kMGTEPYZ",a[2]+1,1),$2) }'

1k: 14336

2k: 759

4k: 1194

...

4G: 9

8G: 3

16G: 1

find . -type f -print0 0.14s user 1.53s system 51% cpu 3.254 total

xargs -0 ls -l 1.22s user 0.23s system 43% cpu 3.370 total

awk 0.08s user 0.00s system 2% cpu 3.370 total

sort -n 0.00s user 0.00s system 0% cpu 3.370 total

awk 0.00s user 0.00s system 0% cpu 3.369 total

And for the ‘data’ dataset:

root@truenas-datto[/mnt/test-pool/share-media]# cd ../share-data

root@truenas-datto[/mnt/test-pool/share-data]# time find . -type f -print0 | xargs -0 ls -l | awk '{ n=int(log($5)/log(2)); if (n<10) { n=10; } size[n]++ } END { for (i in size) printf("%d %d\n", 2^i, size[i]) }' | sort -n | awk 'function human(x) { x[1]/=1024; if (x[1]>=1024) { x[2]++; human(x) } } { a[1]=$1; a[2]=0; human(a); printf("%3d%s: %6d\n", a[1],substr("kMGTEPYZ",a[2]+1,1),$2) }'

1k: 106714

2k: 23595

4k: 20593

...

32G: 1

128G: 4

256G: 1

find . -type f -print0 0.24s user 3.80s system 24% cpu 16.282 total

xargs -0 ls -l 10.29s user 2.39s system 77% cpu 16.345 total

awk 0.78s user 0.02s system 4% cpu 16.345 total

sort -n 0.00s user 0.00s system 0% cpu 16.345 total

awk 0.00s user 0.00s system 0% cpu 16.345 total

To summarize, the special device reduced the time required to traverse all file metadata by about 90% (all times in the table below are in seconds). That said, there was a similar reduction if I ran the command multiple times in a row without a special vdev, due to ZFS’ ARC caching the metadata. It would be trivial to write a cron task that would iterate over files on a regular basis to keep that data in ARC as frequently accessed data.

| | share-media | share-data |
|---|---|---|
| no special vdev (s) | 37.2 | 163.07 |
| with special vdev (s) | 3.25 | 16.28 |
| delta (s) | -33.95 | -146.79 |
| delta % | -91.3% | -90.0% |
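The cron idea mentioned above could be as simple as the sketch below. The function name, schedule, and paths are all placeholders, and whether the metadata actually stays resident will depend on ARC size and memory pressure on your system.

```shell
# warm_arc is a hypothetical helper: list every file under a path so its
# metadata gets read (and, ideally, stays in ARC). Output is discarded.
warm_arc() {
    find "$1" -type f -exec ls -ln {} + > /dev/null 2>&1
}

# Saved in a script that calls warm_arc "$1", a crontab entry along these
# lines would run it hourly (schedule and paths are arbitrary examples):
# 0 * * * * /root/warm-arc.sh /mnt/test-pool
```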

Special device size utilization

As far as I can tell, there isn’t really a good way to calculate in advance how much space will be used by the special device for metadata and such. In my case, 3.74TB of data with special_small_blocks=16k resulted in 11.9GB being used on the special device:

root@truenas-datto[/mnt/test-pool/share-data]# zpool list -v test-pool

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

test-pool 7.30T 3.74T 3.57T - - 0% 51% 1.00x ONLINE /mnt

mirror-0 3.62T 1.78T 1.84T - - 0% 49.1% - ONLINE

da0 3.64T - - - - - - - ONLINE

da1 3.64T - - - - - - - ONLINE

mirror-1 3.62T 1.94T 1.68T - - 0% 53.6% - ONLINE

da2 3.64T - - - - - - - ONLINE

da3 3.64T - - - - - - - ONLINE

special - - - - - - - - -

nvd1 54.9G 11.9G 42.6G - - 1% 21.8% - ONLINE
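Expressed as a ratio, the output above works out to a fraction of a percent of the pool. Here is a small sketch of the arithmetic, assuming the G and T units reported by zpool list are powers of two; the helper name `special_pct` is hypothetical:

```shell
# special_pct: special vdev allocation as a percentage of total pool allocation.
# Arguments: special vdev ALLOC in GiB, pool ALLOC in TiB.
special_pct() {
    awk -v gib="$1" -v tib="$2" 'BEGIN { printf("%.2f\n", 100 * gib / (tib * 1024)) }'
}

special_pct 11.9 3.74
```

That comes to about 0.31% for this particular mix of files and special_small_blocks=16k. Treat it as a single data point rather than a rule, since the ratio will shift with file size distribution and recordsize.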

Conclusion

If you’ve made it this far, congrats. I didn’t intend to write this many words on ZFS benchmarks but here we are. Hopefully you found this data interesting/insightful and/or learned something from it. At a minimum, you should now be able to calculate how much space you need if you want to utilize a special vdev. Also special thanks to Intel for sending me Optane samples to play around with and benchmark.

Here’s a table with a summary of the results:

Please let me know in the comments of this post if you’d like me to re-run any benchmarks, or have any questions/comments/concerns about the process!
