Background

The last post covered how to deploy virtual machines in Proxmox with Terraform. This post shows the template for deploying 4 Kubernetes virtual machines in Proxmox using Terraform.

YouTube Video Link

Kubernetes Proxmox Terraform Template

Without further ado, below is the template I used to create my virtual machines. The main LAN network is 10.98.1.0/24, and the Kube internal network (on its own bridge) is 10.17.0.0/24.

This template creates a Kube server, two agents, and a storage server.
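The template derives each VM's name, VMID, and IP addresses from count.index using string interpolation. As a rough, standalone sketch of that pattern for the two agents (the local and output names here are assumptions for illustration and are not part of the original template):

```hcl
# Standalone illustration: needs no provider, so it can be evaluated
# in an empty directory with `terraform init` and `terraform apply`.
locals {
  agent_count = 2 # mirrors count = 2 on the kube-agent resource

  # Build the same strings the template builds with count.index.
  agents = [
    for i in range(local.agent_count) : {
      name    = "kube-agent-0${i + 1}" # kube-agent-01, kube-agent-02
      vmid    = "50${i + 1}"           # 501, 502
      lan_ip  = "10.98.1.5${i + 1}"    # 10.98.1.51, 10.98.1.52
      kube_ip = "10.17.0.5${i + 1}"    # 10.17.0.51, 10.17.0.52
    }
  ]
}

output "agents" {
  value = local.agents
}
```

Because the digits are simply concatenated, this scheme only works for up to nine machines per group. A tenth agent would produce a name like kube-agent-010 and an invalid address such as 10.98.1.510.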

Update 2022-04-26: bumped Telmate provider version to 2.9.8 from 2.7.4

terraform {
  required_providers {
    proxmox = {
      source  = "telmate/proxmox"
      version = "2.9.8"
    }
  }
}

provider "proxmox" {
  pm_api_url          = "https://prox-1u.home.fluffnet.net:8006/api2/json" # change this to match your own proxmox
  pm_api_token_id     = [secret]
  pm_api_token_secret = [secret]
  pm_tls_insecure     = true
}

resource "proxmox_vm_qemu" "kube-server" {
  count       = 1
  name        = "kube-server-0${count.index + 1}"
  target_node = "prox-1u"

  # thanks to Brian on YouTube for the vmid tip
  # http://www.youtube.com/channel/UCTbqi6o_0lwdekcp-D6xmWw
  vmid = "40${count.index + 1}"

  clone = "ubuntu-2004-cloudinit-template"

  agent    = 1
  os_type  = "cloud-init"
  cores    = 2
  sockets  = 1
  cpu      = "host"
  memory   = 4096
  scsihw   = "virtio-scsi-pci"
  bootdisk = "scsi0"

  disk {
    slot    = 0
    size    = "10G"
    type    = "scsi"
    storage = "local-zfs"
    #storage_type = "zfspool"
    iothread = 1
  }

  network {
    model  = "virtio"
    bridge = "vmbr0"
  }

  network {
    model  = "virtio"
    bridge = "vmbr17"
  }

  lifecycle {
    ignore_changes = [
      network,
    ]
  }

  ipconfig0 = "ip=10.98.1.4${count.index + 1}/24,gw=10.98.1.1"
  ipconfig1 = "ip=10.17.0.4${count.index + 1}/24"

  sshkeys = <<EOF
${var.ssh_key}
EOF
}

resource "proxmox_vm_qemu" "kube-agent" {
  count       = 2
  name        = "kube-agent-0${count.index + 1}"
  target_node = "prox-1u"
  vmid        = "50${count.index + 1}"

  clone = "ubuntu-2004-cloudinit-template"

  agent    = 1
  os_type  = "cloud-init"
  cores    = 2
  sockets  = 1
  cpu      = "host"
  memory   = 4096
  scsihw   = "virtio-scsi-pci"
  bootdisk = "scsi0"

  disk {
    slot    = 0
    size    = "10G"
    type    = "scsi"
    storage = "local-zfs"
    #storage_type = "zfspool"
    iothread = 1
  }

  network {
    model  = "virtio"
    bridge = "vmbr0"
  }

  network {
    model  = "virtio"
    bridge = "vmbr17"
  }

  lifecycle {
    ignore_changes = [
      network,
    ]
  }

  ipconfig0 = "ip=10.98.1.5${count.index + 1}/24,gw=10.98.1.1"
  ipconfig1 = "ip=10.17.0.5${count.index + 1}/24"

  sshkeys = <<EOF
${var.ssh_key}
EOF
}

resource "proxmox_vm_qemu" "kube-storage" {
  count       = 1
  name        = "kube-storage-0${count.index + 1}"
  target_node = "prox-1u"
  vmid        = "60${count.index + 1}"

  clone = "ubuntu-2004-cloudinit-template"

  agent    = 1
  os_type  = "cloud-init"
  cores    = 2
  sockets  = 1
  cpu      = "host"
  memory   = 4096
  scsihw   = "virtio-scsi-pci"
  bootdisk = "scsi0"

  disk {
    slot    = 0
    size    = "20G"
    type    = "scsi"
    storage = "local-zfs"
    #storage_type = "zfspool"
    iothread = 1
  }

  network {
    model  = "virtio"
    bridge = "vmbr0"
  }

  network {
    model  = "virtio"
    bridge = "vmbr17"
  }

  lifecycle {
    ignore_changes = [
      network,
    ]
  }

  ipconfig0 = "ip=10.98.1.6${count.index + 1}/24,gw=10.98.1.1"
  ipconfig1 = "ip=10.17.0.6${count.index + 1}/24"

  sshkeys = <<EOF
${var.ssh_key}
EOF
}
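The template references var.ssh_key but the variable declaration is not shown, and the API token values are redacted as [secret]. A minimal sketch of how these might be declared follows. Only ssh_key is named in the template; the variable names pm_api_token_id and pm_api_token_secret are hypothetical, chosen to mirror the provider arguments, and how the original setup supplies them is not stated.

```hcl
# variables.tf (hypothetical file name)

# Referenced by the template as var.ssh_key.
variable "ssh_key" {
  description = "Public SSH key injected into each VM through cloud-init"
  type        = string
}

# Hypothetical variables for the redacted provider credentials.
# The sensitive flag requires Terraform 0.14 or later.
variable "pm_api_token_id" {
  description = "Proxmox API token ID"
  type        = string
  sensitive   = true
}

variable "pm_api_token_secret" {
  description = "Proxmox API token secret"
  type        = string
  sensitive   = true
}
```

Values can then be supplied through a terraform.tfvars file kept out of version control, or through environment variables such as TF_VAR_ssh_key.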

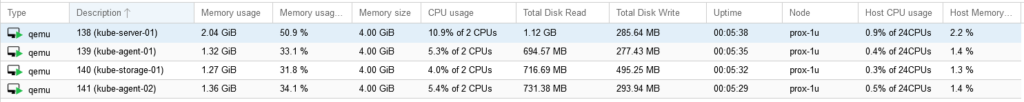

After running Terraform plan and apply, you should have 4 new VMs in your Proxmox cluster:

Conclusion

You now have 4 VMs ready for Kubernetes installation. The next post shows how to deploy a Kubernetes cluster with Ansible.
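For a quick check of what was created, and a list of names to hand to later tooling, an output block along these lines could be added next to the template. This is a sketch: the output name kube_vm_names is an assumption, while the resource addresses match the three resources defined in the template.

```hcl
# Collect the names of all VMs created by the three counted resources.
output "kube_vm_names" {
  value = concat(
    proxmox_vm_qemu.kube-server[*].name,
    proxmox_vm_qemu.kube-agent[*].name,
    proxmox_vm_qemu.kube-storage[*].name,
  )
}
```

After apply, running terraform output kube_vm_names should list the server, the two agents, and the storage VM.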

9 replies on “Deploying Kubernetes VMs in Proxmox with Terraform”

[…] glad I worked through everything and can pass it along. Check back soon for my next post on using Terraform to deploy a full set of Kubernetes machines to a Proxmox cluster (and thrilling sequel to that post, Using Ansible to bootstrap a Kubernetes […]

Hi, I just discovered your blog through the Terraform Proxmox video on YouTube. I hope you will continue for a long time because I am already addicted to your straightforward and reasonable tutorials.

Greetings from Germany

Hi Eike, I do plan to continue! We have a 15-month-old at home, though, so time is scarce. These Proxmox/Terraform articles are some of the most popular, so my next post will probably be ‘using Ansible to bootstrap a Kubernetes cluster’, which I mentioned in the post and video (quite a while ago at this point). It is overdue!

Awesome post! Thank you. Please, please, continue writing.

awesome work ! thanks mate !

Hi, great articles, but I’m stuck and have a question 🙂 Can you show me how you created vmbr17 in Proxmox for the Kube network?

Pretty sure I did it manually in the Proxmox interface. Go to the Web UI -> expand datacenter -> click on the node you want to have the new VMBR -> network -> create -> linux bridge -> name it whatever you want (e.g. vmbr17), autostart=checked, create. If you give it an IP address, the proxmox node will gain that network address as well. If you don’t give it an IP address, Proxmox itself won’t use that bridge.

Thank you very much Master: it worked in my homelab.

Now I will apply the Kubernetes cluster.

Congratulations on your baby (I’m late, but it’s never too late to wish someone well).

It took me a while to spin it up.

I’m not sure if my environment is a lot different from yours.

– Proxmox 8

– Telmate/proxmox 3.0.1-rc4

– Storage is lvm-thin

I had to change the code for the disks and boot order a bit, and I switched scsihw to virtio-scsi-single only because of a warning about iothread*.

If anyone needs it:

scsihw = "virtio-scsi-single"
boot   = "order=scsi0"

disks {
  ide {
    ide3 {
      cloudinit {
        storage = "lvm-thin"
      }
    }
  }
  scsi {
    scsi0 {
      disk {
        # Beware of slot
        #slot = 0
        size     = "10G"
        storage  = "lvm-thin"
        iothread = true
      }
    }
  }
}

* For iothread I was getting:

iothread is only valid with virtio disk or virtio-scsi-single controller, ignoring