Background

I’d like to learn Kubernetes and DevOps. A typical Kubernetes cluster needs at least 3 VMs or bare-metal machines. In my last post, I wrote about how to create an Ubuntu cloud-init template for Proxmox. In this post, we’ll take that template and use Terraform to automatically deploy a couple of VMs from it. If you don’t have a template yet, you need one before proceeding.

Overview

- Install Terraform

- Determine authentication method for Terraform to interact with Proxmox (user/pass vs API keys)

- Terraform basic initialization and provider installation

- Develop Terraform plan

- Terraform plan

- Run Terraform plan and watch the VMs appear!

YouTube Video Link

If you prefer video versions to follow along, head over to https://youtu.be/UXXIl421W8g for a live-action video of me deploying virtual machines in Proxmox using Terraform and explaining why we’re running each command.

#1 – Install Terraform

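```shell
# add HashiCorp's signing key and apt repository, then install terraform
# note: apt-key is deprecated on newer Ubuntu releases; HashiCorp's current
# install docs add the key to a dedicated keyring file instead
curl -fsSL https://apt.releases.hashicorp.com/gpg | sudo apt-key add -
sudo apt-add-repository "deb [arch=$(dpkg --print-architecture)] https://apt.releases.hashicorp.com $(lsb_release -cs) main"
sudo apt update
sudo apt install terraform
```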

#2 – Determine Authentication Method (use API keys)

You have two options here:

- Username/password – you can use the existing default root user and root password here to make things easy… or

- API keys – this involves setting up a new user, giving that new user the required permissions, and then setting up API keys so that user doesn’t have to type in a password to perform actions

I went with the API key method since it is not desirable to have your root password sitting in Terraform files (even keeping it in an environment variable isn’t a great idea). I didn’t really know what I was doing, and I basically gave the new user full admin permissions anyway. Should I lock it down? Surely. Do I know the minimum permissions required to do so? Nope. If someone in the comments or on Reddit could enlighten me, I’d really appreciate it!
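For comparison, here is roughly what the username/password option would look like. This is a sketch, not what I ran: it assumes the Telmate provider's pm_user and pm_password arguments, and pm_password is a hypothetical variable name. Even with the value kept out of the .tf file, the root password still has to live somewhere (a shell variable, a .tfvars file), which is the problem the API token approach avoids.

```hcl
# Sketch only: the username/password alternative (not what this post uses).
# "pm_password" is a hypothetical variable; its value must be supplied at run
# time (prompt, -var, .tfvars, or TF_VAR_pm_password) instead of a literal here.
variable "pm_password" {
  type      = string
  sensitive = true # redacts the value in plan/apply output (Terraform 0.14+)
}

provider "proxmox" {
  pm_api_url      = "https://prox-1u:8006/api2/json"
  pm_user         = "root@pam" # user name including the realm
  pm_password     = var.pm_password
  pm_tls_insecure = true
}
```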

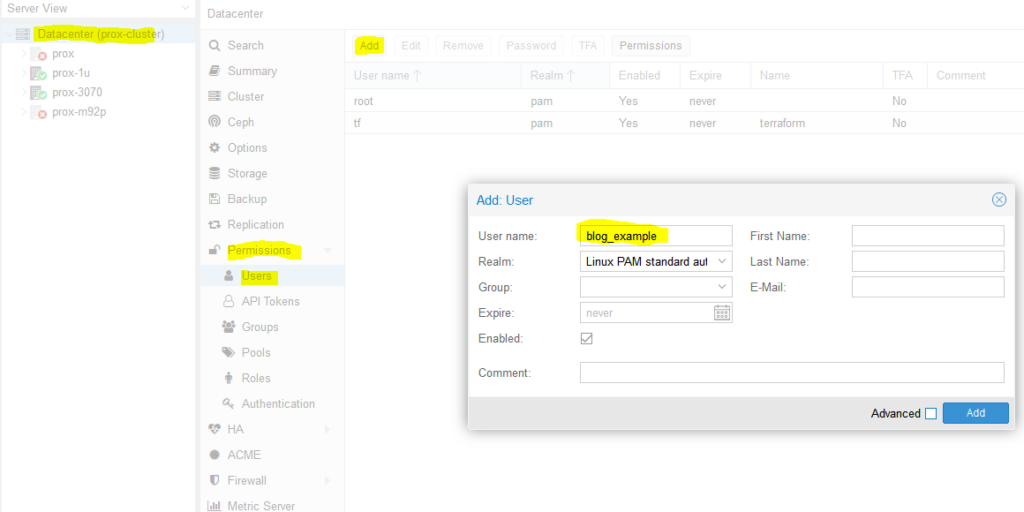

So we need to create a new user. We’ll name it ‘blog_example’. To add a new user, go to Datacenter in the left pane, then Permissions -> Users -> Add, name the user, and click Add.

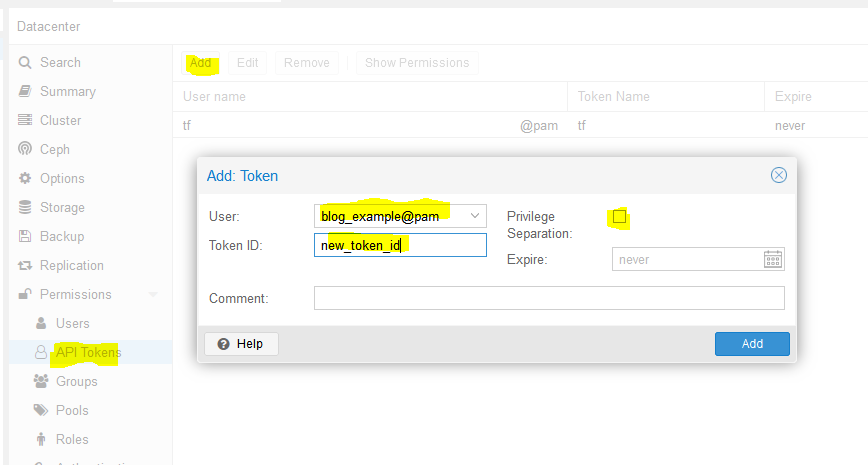

Next, we need to add an API token. Click API Tokens below Users in the Permissions category and click Add. Select the user you just created, give the token an ID, and uncheck Privilege Separation (unchecking it means the token gets the same permissions as the user):

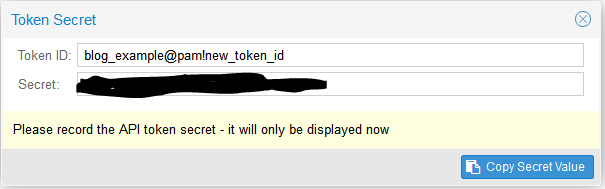

When you click Add it will show you the key. Save this key. It will never be displayed again!

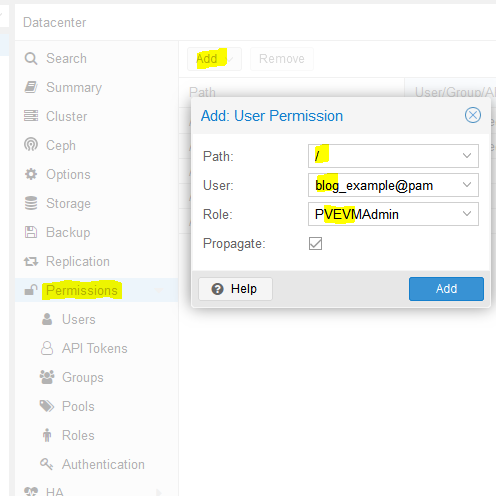

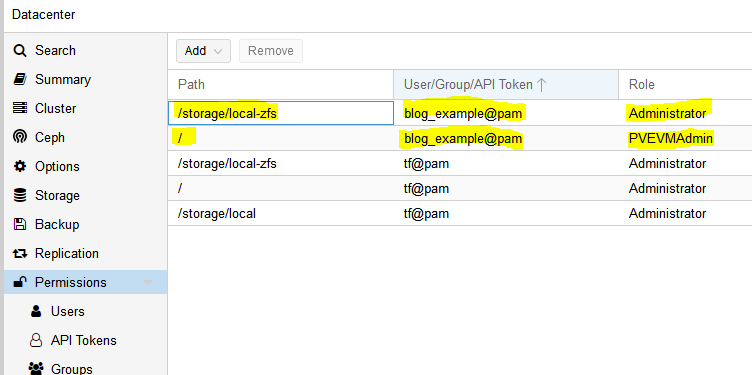

Next we need to add a role to the new user. Permissions -> Add -> Path = ‘/’, User = the one you just made, Role = ‘PVEVMAdmin’. This gives the user (and the associated API token!) rights to do VM admin activities everywhere (the / path is the root of the permission tree, so it covers all nodes):

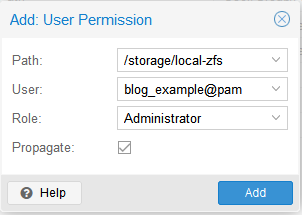

You also need to add permissions to the storage used by the VMs you want to deploy (both the storage you clone from and the storage you deploy to). For me this is /storage/local-zfs (it might be /storage/local-lvm for you). Add that path as well. Use Admin for the role here because the user also needs to be able to allocate space in the datastore (you could use PVEVMAdmin plus a datastore role instead, but I haven’t dug into which one yet).

At this point we are done with the permissions.

It is time to turn to Terraform.

#3 – Terraform basic initialization and provider installation

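Terraform has three main stages: init, plan, and apply. We will start by describing the plans, which can be thought of as a type of configuration file for what you want to do (strictly speaking, Terraform calls the .tf files the configuration, and the plan is what terraform plan produces from them). Plans are files stored in directories; Terraform reads all the .tf files in the directory you run it from. Make a new directory (terraform-blog) and create two files, main.tf and vars.tf: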

```shell
cd ~
mkdir terraform-blog && cd terraform-blog
touch main.tf vars.tf
```

The two files are hopefully reasonably named. The main content will go in main.tf, and we will put a few variables in vars.tf. Everything could go in main.tf, but it is good practice to start splitting things out early. I actually don’t have as much in vars.tf as I should, but we all gotta start somewhere.

Ok, so in main.tf let’s add the bare minimum. We need to tell Terraform to use a provider, which is the term for the plugin that connects Terraform to whatever it will be interacting with. Since we are using Proxmox, we need a Proxmox provider. This is actually super easy: we just specify the source and version, and Terraform downloads it from the Terraform Registry and installs it. I used the Telmate Proxmox provider.

main.tf:

```hcl
terraform {
  required_providers {
    proxmox = {
      source  = "telmate/proxmox"
      version = "2.7.4"
    }
  }
}
```
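Pinning the exact version is the safest way to follow along, since later releases of this provider changed a lot (see the comments below, where 2.8.0 and newer cause trouble). If you would rather pick up bug-fix releases automatically, Terraform's pessimistic constraint operator is one option. This is a sketch of what you might want, not what I ran; the required_version value is an assumption based on the lock file that terraform init creates, which first appeared in Terraform 0.14.

```hcl
terraform {
  # Assumption: Terraform 0.14 or newer (the release that introduced .terraform.lock.hcl).
  required_version = ">= 0.14"

  required_providers {
    proxmox = {
      source = "telmate/proxmox"
      # "~> 2.7.4" means >= 2.7.4 and < 2.8.0: later 2.7.x patch releases (if any)
      # are allowed, but a minor release with breaking changes is not picked up.
      version = "~> 2.7.4"
    }
  }
}
```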

Save the file. Now we’ll initialize Terraform with our barebones plan (terraform init), which will force it to go out and grab the provider. If all goes well, we will be informed that the provider was installed and that Terraform has been initialized. Terraform is also really nice in that it tells you the next step towards the bottom of the output (“try running ‘terraform plan’ next”).

```
austin@EARTH:/mnt/c/Users/Austin/terraform-blog$ terraform init

Initializing the backend...

Initializing provider plugins...
- Finding telmate/proxmox versions matching "2.7.4"...
- Installing telmate/proxmox v2.7.4...
- Installed telmate/proxmox v2.7.4 (self-signed, key ID A9EBBE091B35AFCE)

Partner and community providers are signed by their developers.
If you'd like to know more about provider signing, you can read about it here:
https://www.terraform.io/docs/cli/plugins/signing.html

Terraform has created a lock file .terraform.lock.hcl to record the provider
selections it made above. Include this file in your version control repository
so that Terraform can guarantee to make the same selections by default when
you run "terraform init" in the future.

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

#4 – Develop Terraform plan

Alright, with the provider installed, it is time to use it to deploy a VM. We will use the template we created in the last post (How to create a Proxmox Ubuntu cloud-init image). Change your main.tf file to the following. I break it down inside the file with comments.

```hcl
terraform {
  required_providers {
    proxmox = {
      source  = "telmate/proxmox"
      version = "2.7.4"
    }
  }
}

provider "proxmox" {
  # url is the hostname (FQDN if you have one) of the proxmox host you'd like to connect to
  # and issue the commands against. my proxmox host is 'prox-1u'. Add /api2/json at the end for the API
  pm_api_url = "https://prox-1u:8006/api2/json"

  # api token id is in the form of: <username>@<realm>!<tokenId> (the realm here is pam)
  pm_api_token_id = "blog_example@pam!new_token_id"

  # this is the full secret wrapped in quotes. don't worry, I've already deleted this
  # from my proxmox cluster by the time you read this post
  pm_api_token_secret = "9ec8e608-d834-4ce5-91d2-15dd59f9a8c1"

  # leave tls_insecure set to true unless you have your proxmox SSL certificate
  # situation fully sorted out (if you do, you will know)
  pm_tls_insecure = true
}

# resource is formatted to be "[type]" "[entity_name]" so in this case
# we are looking to create a proxmox_vm_qemu entity named test_server
resource "proxmox_vm_qemu" "test_server" {
  count = 1                            # just want 1 for now, set to 0 and apply to destroy VM
  name  = "test-vm-${count.index + 1}" # count.index starts at 0, so + 1 means this VM will be named test-vm-1 in proxmox

  # this now reaches out to the vars file. I could've also used this var above in the
  # pm_api_url setting but wanted to spell it out up there. target_node is different
  # than api_url. target_node is which node hosts the template and thus also which
  # node will host the new VM. it can be different than the host you use to
  # communicate with the API. the variable contains the contents "prox-1u"
  target_node = var.proxmox_host

  # another variable with contents "ubuntu-2004-cloudinit-template"
  clone = var.template_name

  # basic VM settings here. agent refers to guest agent
  agent    = 1
  os_type  = "cloud-init"
  cores    = 2
  sockets  = 1
  cpu      = "host"
  memory   = 2048
  scsihw   = "virtio-scsi-pci"
  bootdisk = "scsi0"

  disk {
    slot = 0
    # set disk size here. leave it small for testing because expanding the disk takes time.
    size     = "10G"
    type     = "scsi"
    storage  = "local-zfs"
    iothread = 1
  }

  # if you want two NICs, just copy this whole network section and duplicate it
  network {
    model  = "virtio"
    bridge = "vmbr0"
  }

  # tells Terraform to ignore changes to the network block during the life of the VM
  # (for example, the MAC address Proxmox generates), so they don't show up as diffs
  lifecycle {
    ignore_changes = [
      network,
    ]
  }

  # the ${count.index + 1} thing appends text to the end of the ip address.
  # in this case, since we are only adding a single VM, the IP will be 10.98.1.91
  # since count.index starts at 0. this is how you can create multiple VMs and
  # have an IP assigned to each (.91, .92, .93, etc.)
  ipconfig0 = "ip=10.98.1.9${count.index + 1}/24,gw=10.98.1.1"

  # sshkeys set using variables. the variable contains the text of the key.
  # <<-EOF strips the leading indentation from the lines inside the heredoc
  sshkeys = <<-EOF
    ${var.ssh_key}
  EOF
}
```

There is a good amount going on in here. Hopefully the embedded comments explain everything. If not, let me know in the comments or on Reddit (u/Nerdy-Austin).
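The ipconfig0 line in main.tf builds the address by appending text, so a tenth VM (count.index 9) would end up with 10.98.1.910. A sketch of an alternative, assuming you want the VMs at .91, .92, and so on in the same /24, uses Terraform's built-in cidrhost() function. It also shows the second NIC that the main.tf comments mention; vmbr1 and the 10.98.2.0/24 subnet are hypothetical values for illustration, and ipconfig1 is the provider's setting for the second interface.

```hcl
# Fragment: these lines would replace the network block and ipconfig0 inside
# resource "proxmox_vm_qemu" "test_server" { ... } in main.tf.

# First NIC, same as before.
network {
  model  = "virtio"
  bridge = "vmbr0"
}

# Hypothetical second NIC: a duplicated network block on another bridge.
network {
  model  = "virtio"
  bridge = "vmbr1"
}

# cidrhost(prefix, hostnum) returns the hostnum-th address in the prefix, so
# count.index 0 -> 10.98.1.91, 1 -> 10.98.1.92, ..., 9 -> 10.98.1.100.
ipconfig0 = "ip=${cidrhost("10.98.1.0/24", 91 + count.index)}/24,gw=10.98.1.1"

# Hypothetical addressing for the second NIC ("ip=dhcp" is also accepted).
ipconfig1 = "ip=${cidrhost("10.98.2.0/24", 91 + count.index)}/24"
```

Because of the lifecycle ignore_changes = [network] setting, adding a NIC this way only takes effect on newly created VMs; Terraform will not try to add it to a VM that already exists.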

Now for the vars.tf file. This is a bit easier to understand. Just declare a variable, give it a name, and a default value. That’s all I know at this point and it works.

```hcl
variable "ssh_key" {
  default = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDcwZAOfqf6E6p8IkrurF2vR3NccPbMlXFPaFe2+Eh/8QnQCJVTL6PKduXjXynuLziC9cubXIDzQA+4OpFYUV2u0fAkXLOXRIwgEmOrnsGAqJTqIsMC3XwGRhR9M84c4XPAX5sYpOsvZX/qwFE95GAdExCUkS3H39rpmSCnZG9AY4nPsVRlIIDP+/6YSy9KWp2YVYe5bDaMKRtwKSq3EOUhl3Mm8Ykzd35Z0Cysgm2hR2poN+EB7GD67fyi+6ohpdJHVhinHi7cQI4DUp+37nVZG4ofYFL9yRdULlHcFa9MocESvFVlVW0FCvwFKXDty6askpg9yf4FnM0OSbhgqXzD austin@EARTH"
}

variable "proxmox_host" {
  default = "prox-1u"
}

variable "template_name" {
  default = "ubuntu-2004-cloudinit-template"
}
```
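The vars.tf above only uses default, but a variable can also declare a type, a description, and (in Terraform 0.14 and later) sensitive = true, which redacts the value in plan and apply output. A sketch of using that to pull the API token out of main.tf, assuming the hypothetical variable names pm_api_token_id and pm_api_token_secret; the provider block shown would replace the one in main.tf, and it uses var.proxmox_host in the URL as the main.tf comments suggest.

```hcl
# vars.tf additions: no default, so the secret never has to live in a .tf file.
variable "pm_api_token_id" {
  type        = string
  description = "Proxmox API token ID, in the form user@realm!tokenid"
}

variable "pm_api_token_secret" {
  type        = string
  description = "Proxmox API token secret (shown only once when the token is created)"
  sensitive   = true # redacted in plan/apply output
}

# main.tf: this provider block replaces the one with the literal values.
provider "proxmox" {
  pm_api_url          = "https://${var.proxmox_host}:8006/api2/json"
  pm_api_token_id     = var.pm_api_token_id
  pm_api_token_secret = var.pm_api_token_secret
  pm_tls_insecure     = true
}
```

Terraform prompts for any variable that has no default and no value. To avoid typing the secret every time, the values could go in a terraform.tfvars file (loaded automatically from the working directory) that you keep out of version control.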

#5 – Terraform plan (official term for “what will Terraform do next”)

Now with the .tf files completed, we can run the plan (terraform plan). We defined a count=1 resource, so we would expect Terraform to create a single VM. Let’s have Terraform run through the plan and tell us what it intends to do. It tells us a lot.

```
austin@EARTH:/mnt/c/Users/Austin/terraform-blog$ terraform plan

Terraform used the selected providers to generate the following execution plan. Resource actions
are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # proxmox_vm_qemu.test_server[0] will be created
  + resource "proxmox_vm_qemu" "test_server" {
      + additional_wait = 15
      + agent = 1
      + balloon = 0
      + bios = "seabios"
      + boot = "cdn"
      + bootdisk = "scsi0"
      + clone = "ubuntu-2004-cloudinit-template"
      + clone_wait = 15
      + cores = 2
      + cpu = "host"
      + default_ipv4_address = (known after apply)
      + define_connection_info = true
      + force_create = false
      + full_clone = true
      + guest_agent_ready_timeout = 600
      + hotplug = "network,disk,usb"
      + id = (known after apply)
      + ipconfig0 = "ip=10.98.1.91/24,gw=10.98.1.1"
      + kvm = true
      + memory = 2048
      + name = "test-vm-1"
      + nameserver = (known after apply)
      + numa = false
      + onboot = true
      + os_type = "cloud-init"
      + preprovision = true
      + reboot_required = (known after apply)
      + scsihw = "virtio-scsi-pci"
      + searchdomain = (known after apply)
      + sockets = 1
      + ssh_host = (known after apply)
      + ssh_port = (known after apply)
      + sshkeys = <<-EOT
            ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDcwZAOfqf6E6p8IkrurF2vR3NccPbMlXFPaFe2+Eh/8QnQCJVTL6PKduXjXynuLziC9cubXIDzQA+4OpFYUV2u0fAkXLOXRIwgEmOrnsGAqJTqIsMC3XwGRhR9M84c4XPAX5sYpOsvZX/qwFE95GAdExCUkS3H39rpmSCnZG9AY4nPsVRlIIDP+/6YSy9KWp2YVYe5bDaMKRtwKSq3EOUhl3Mm8Ykzd35Z0Cysgm2hR2poN+EB7GD67fyi+6ohpdJHVhinHi7cQI4DUp+37nVZG4ofYFL9yRdULlHcFa9MocESvFVlVW0FCvwFKXDty6askpg9yf4FnM0OSbhgqXzD austin@EARTH
        EOT
      + target_node = "prox-1u"
      + unused_disk = (known after apply)
      + vcpus = 0
      + vlan = -1
      + vmid = (known after apply)

      + disk {
          + backup = 0
          + cache = "none"
          + file = (known after apply)
          + format = (known after apply)
          + iothread = 1
          + mbps = 0
          + mbps_rd = 0
          + mbps_rd_max = 0
          + mbps_wr = 0
          + mbps_wr_max = 0
          + media = (known after apply)
          + replicate = 0
          + size = "10G"
          + slot = 0
          + ssd = 0
          + storage = "local-zfs"
          + storage_type = (known after apply)
          + type = "scsi"
          + volume = (known after apply)
        }

      + network {
          + bridge = "vmbr0"
          + firewall = false
          + link_down = false
          + macaddr = (known after apply)
          + model = "virtio"
          + queues = (known after apply)
          + rate = (known after apply)
          + tag = -1
        }
    }

Plan: 1 to add, 0 to change, 0 to destroy.

────────────────────────────────────────────────────────────────────────────────────────────────

Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take
exactly these actions if you run "terraform apply" now.
```

You can see the output of the planning phase of Terraform. It is telling us it will create proxmox_vm_qemu.test_server[0] with a list of parameters. You can double-check the IP address here, as well as the rest of the basic settings. At the bottom is the summary – “Plan: 1 to add, 0 to change, 0 to destroy.” Also note that it tells us again what step to run next – “terraform apply”.

#6 – Execute the Terraform plan and watch the VMs appear!

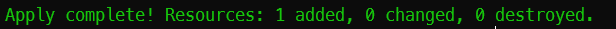

With the summary stating what we want, we can now apply the plan (terraform apply). Note that it prompts you to type in ‘yes’ to apply the changes after it determines what the changes are. It typically takes 1m15s +/- 15s for my VMs to get created.

If all goes well, you will be informed that 1 resource was added!

Command and output (the execution plan that terraform apply prints first is identical to the terraform plan output above, so it is trimmed here):

```
austin@EARTH:/mnt/c/Users/Austin/terraform-blog$ terraform apply

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # proxmox_vm_qemu.test_server[0] will be created
  + resource "proxmox_vm_qemu" "test_server" {
      (same attributes, disk, and network blocks as in the terraform plan output above)
    }

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes

proxmox_vm_qemu.test_server[0]: Creating...
proxmox_vm_qemu.test_server[0]: Still creating... [10s elapsed]
proxmox_vm_qemu.test_server[0]: Still creating... [20s elapsed]
proxmox_vm_qemu.test_server[0]: Still creating... [30s elapsed]
proxmox_vm_qemu.test_server[0]: Still creating... [40s elapsed]
proxmox_vm_qemu.test_server[0]: Still creating... [50s elapsed]
proxmox_vm_qemu.test_server[0]: Still creating... [1m0s elapsed]
proxmox_vm_qemu.test_server[0]: Creation complete after 1m9s [id=prox-1u/qemu/142]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.
```

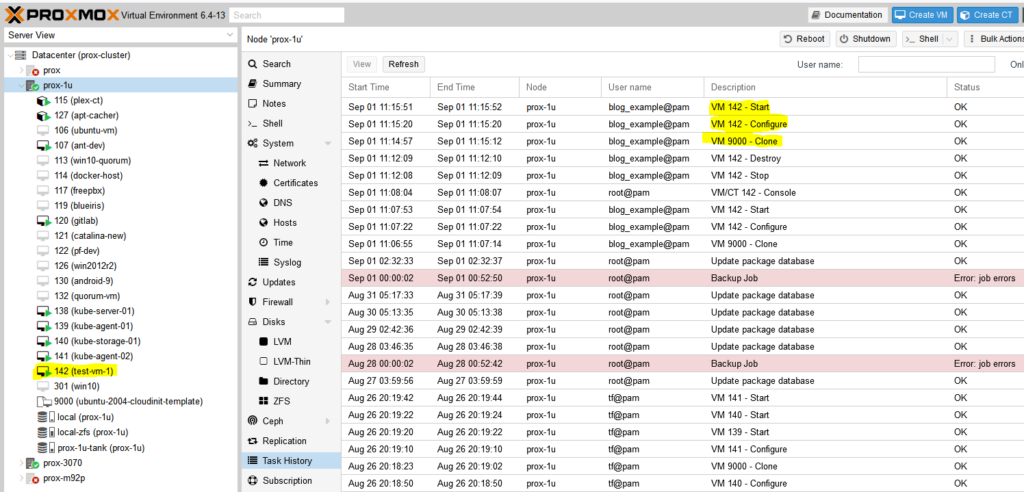

Now go check Proxmox and see if your VM was created:

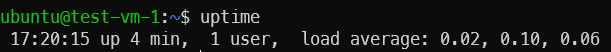

Success! You should now be able to SSH into the new VM with the key you already provided (note: the username will be ‘ubuntu’, not the name at the end of your key, such as austin@EARTH, which is just a comment).

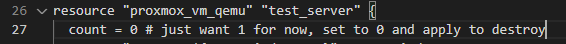
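Rather than looking the address up in the Proxmox UI, Terraform can print it after apply. A sketch, assuming the provider fills in default_ipv4_address (it shows up as "known after apply" in the plan output above, and it likely depends on the QEMU guest agent, which agent = 1 enables, actually running inside the VM); the output name and file name are hypothetical.

```hcl
# outputs.tf (hypothetical file name; any .tf file in the directory works)
output "test_server_ips" {
  description = "IPv4 address reported for each test VM"
  # [*] collects the attribute from every instance created by count
  value = proxmox_vm_qemu.test_server[*].default_ipv4_address
}
```

After the next terraform apply, terraform output test_server_ips prints the list, and you can SSH in with ssh ubuntu@ followed by the address.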

Last – Removing the test VM

To remove the test VM, I just set count to 0 for the resource in main.tf and run terraform apply again; Terraform stops and destroys the VM.
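Editing main.tf to flip count works, but the same thing can be driven from the command line. A sketch, assuming a hypothetical vm_count variable added to vars.tf; the shell commands are shown as comments.

```hcl
# vars.tf: hypothetical variable controlling how many VMs to create
variable "vm_count" {
  type    = number
  default = 1
}

# main.tf, inside resource "proxmox_vm_qemu" "test_server", replace count = 1 with:
#   count = var.vm_count
#
# Then, from the shell:
#   terraform apply -var="vm_count=3"   # keep test-vm-1, add test-vm-2 and test-vm-3
#   terraform apply -var="vm_count=0"   # destroy all of them
#   terraform destroy                   # or remove every resource in this configuration
```

Keep in mind that a later plain terraform apply (without -var) falls back to the default of 1, so it would create or remove VMs to get back to one.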

Conclusion

This felt like a quick-n-dirty tutorial on using Terraform to deploy virtual machines in Proxmox, but looking back, there is a decent amount of detail. It took me quite a while to work through permission issues, invalid hostnames (turns out you can’t have underscores (_) in hostnames, duh, and that took an hour to find), assigning roles to users vs. the associated API tokens, etc., but I’m glad I worked through everything and can pass it along. Check back soon for my next post on using Terraform to deploy a full set of Kubernetes machines to a Proxmox cluster (and the thrilling sequel to that post, Using Ansible to bootstrap a Kubernetes Cluster)!

50 replies on “How to deploy VMs in Proxmox with Terraform”

[…] Next post – How to deploy VMs in Proxmox with Terraform […]

Hello,

Thank you for this post, it’s been very helpful! It does appear that when running terraform apply it will destroy the cloned guest, even if there is no change made in the main.tf file. This can be seen by running terraform plan and it states “proxmox_vm_qemu.[vmname] must be replaced”

The only difference in my main.tf is that I’ve set my guest machine to use dhcp and added a MAC address argument so I can set a reservation on my DHCP server.

I’m pretty new to Terraform, so I have to wonder: is it normal for a provider to destroy infrastructure and rebuild it to make a simple change (memory allocation, for example)?

This terraform provider is not very robust. I have found it destroys for very simple changes.

Great post, thanks!

For me, what was missing was the role-creation for the proxmox-automation-user. This is very well documented here: https://registry.terraform.io/providers/Telmate/proxmox/latest/docs#creating-the-proxmox-user-and-role-for-terraform

This site/blog is what I have been looking for… A nice clean example of deploying infrastructure as code. Will definitely be following along with your progress.

[…] last post covered how to deploy virtual machines in Proxmox with Terraform. This post shows the template for deploying 4 Kubernetes virtual machines in Proxmox using […]

For me, when I run apply, it returns this error:

```
proxmox_vm_qemu.terraform-teste[0]: Still creating... [5m30s elapsed]
╷
│ Error: Plugin did not respond
│
│   with proxmox_vm_qemu.terraform-teste[0],
│   on main.tf line 20, in resource "proxmox_vm_qemu" "terraform-teste":
│   20: resource "proxmox_vm_qemu" "terraform-teste" {
│
│ The plugin encountered an error, and failed to respond to the plugin.(*GRPCProvider).ApplyResourceChange call. The plugin logs may contain more
│ details.
╵

Stack trace from the terraform-provider-proxmox_v2.8.0 plugin:

panic: interface conversion: interface {} is nil, not []interface {}
```

Can you help me?

There appears to be an error with how you have your network interface defined – can you copy + paste that portion of your .tf file?

It has something to do with Proxmox version 8.x.x. Change it to:

```hcl
proxmox = {
  source  = "thegameprofi/proxmox"
  version = "2.9.15"
}
```

I’m having problems when it comes to the apply phase. Terraform keeps erroring out with a “400 Parameter verification failed”. My debug output is at:

https://pastebin.com/JTVT9Fju

I have tried upgrading and downgrading the provider, and downgrading terraform to test the current versions, the versions you used, and even the version you said should have been used in the video.

Reading through the debug output, it is connecting to my server, reading a current list of containers and VMs, getting the next available ID, then failing at the cloning.

Any help would be appreciated.

It looks like it is complaining about your node name – what are you specifying for the proxmox host, as well as the new VM’s name? I actually ran into this same error. I had an underscore (_) in the name of the to-be-created VM which of course isn’t valid in hostnames. If you are specifying an IP address instead of your proxmox hostname, try the hostname instead.

That worked out. I was using the IP address and changed it to the node name. It spins the VMs up just fine. However, now when I change the count to equal 0 it doesn’t seem to pick up the fact that it needs to destroy a VM. plan says “No changes. Your infrastructure matches the configuration.” and apply does the same thing.

Austin, many thanks for this! I used your “cloud-init for Proxmox” blog post with this one and have fully automated an RKE2 Rancher Management cluster.

I build K8s clusters with Terraform and SaltStack on EC2 and VMware, but Proxmox was giving me a bit of trouble getting the network up so I could install the salt-minion. What you provided solved the last piece on my own network for full CI/CD.

You are welcome! I am glad you found it helpful!

In #1 ‘apt install terraform’ fails unless you do ‘apt update’ before.

Indeed it does. Updated. Thanks for pointing that out.

In #3 ‘which can be though of a a type’ should be ‘which can be thought of as a type’

I followed all the steps and I keep getting the following error when running terraform apply “Error: Vm ‘ub2004-tmplt’ not found”

any ideas on what I need to do?

Can you double check the permissions? There have been a few others with the same issue and it always turns out to be a permissions issue.

Thanks for the detailed post!

I faced a strange issue. Each time I run terraform plan, it shows me changes in the base image:

```
~ disk {
    ~ size = "2252M" -> "30G"
    ~ type = "scsi" -> "virtio"
      # (15 unchanged attributes hidden)
  }
```

It looks like it compares the base VM disk of type scsi with the additional 30G virtio disk.

Have you faced this issue?

Also, the official documentation for the provider has a weird description for the slot parameter:

slot int _(not sure what this is for, seems to be deprecated, do not

https://registry.terraform.io/providers/Telmate/proxmox/latest/docs/resources/vm_qemu

Yes, the cloud-init image needs to be expanded. Guessing you set the size to 30G?

Thanks for your post. I may have found the answer to your question:

Do I know what the minimum required permissions are to do so? Nope.

```shell
pveum role add terraform-role -privs "VM.Allocate VM.Clone VM.Config.CDROM VM.Config.CPU VM.Config.Cloudinit VM.Config.Disk VM.Config.HWType VM.Config.Memory VM.Config.Network VM.Config.Options VM.Monitor VM.Audit VM.PowerMgmt Datastore.AllocateSpace Datastore.Audit"
```

Source:

https://codingpackets.com/blog/proxmox-provision-guests-with-terraform/

Hi, for some reason Terraform cannot find the Ubuntu cloud-init template, even though I used your how-to for creating a cloud-init template.

```
proxmox_vm_qemu.test_server[0]: Creating...
╷
│ Error: Vm 'ubuntu-2004-cloudinit-template' not found
│
│   with proxmox_vm_qemu.test_server[0],
│   on main.tf line 24, in resource "proxmox_vm_qemu" "test_server":
│   24: resource "proxmox_vm_qemu" "test_server" {
```

Is there something I’ve missed?

This is the command I used to create the server, which I afterwards converted into a template:

```shell
sudo qm create 9000 --name "ubuntu-2004-cloudinit-template" --memory 1024 --cores 2 --net0 virtio,bridge=vmbr0
```

nvm, found the issue. I forgot to uncheck the privilege separation checkbox while creating the token.

Hello,

how do I create new VMs in the same main.tf file without destroying the old ones?

Thanks

Hi, I’m following your tutorial.

I have an error: my VM just won’t boot. When I look in the console, it says no bootable device found, so I don’t know what is going on here. I made the template and followed the process just like you, only changing the disk type to mine, but it didn’t work.

If you are running into this in 2023, there was an update to the provider and Proxmox 8.x that requires a new disk format to function, as well as a new required cloud-init variable for the CD-ROM storage. Both changes, which fixed this for me, are below.

```hcl
cloudinit_cdrom_storage = "dell-flash"

disks {
  scsi {
    scsi0 {
      disk {
        size    = 10
        storage = "nvme" # or whatever storage
      }
    }
  }
}
```

Thanks, yes I did go through the exercise again recently and updated my notes but haven’t got around to posting yet. Lots of breaking changes.

[…] is another post that was inspired by Austin at AustinsNerdyThings.com … Thanks […]

Question: I am having an issue where it always gives me a DHCP address.

Have you seen this before?

I have not – I always specify what I want the IP address to be so it just uses the predefined static IPs.

Can I use another location apart from local-lvm?

Yes, you can use whatever storage you want. I use local-zfs for example.

Thank you!!!!! Excellent

Hey!

EOF reading SSH Keys is not working for me.

The code I copied from here:

```hcl
# sshkeys set using variables. the variable contains the text of the key.
sshkeys = <<EOF
${var.ssh_key}
EOF
```

The error I get:

```
2022-12-09T07:18:00.491+0100 [DEBUG] provider.stdio: received EOF, stopping recv loop: err="rpc error: code = Unavailable desc = error reading from server: EOF"
```

Some ideas?

You also have the variables file in place and present with ssh_key value?

Hi, and thanks a lot for your blog.

I get this error with ‘terraform plan’:

```
Planning failed. Terraform encountered an error while generating this plan.

╷
│ Error: user does not exist or has insufficient permissions on proxmox: susumanin@pam!new_token_id
│
│   with provider["registry.terraform.io/telmate/proxmox"],
│   on main.tf line 10, in provider "proxmox":
│   10: provider "proxmox" {
│
```

I made the user in Proxmox as you describe.

I also tried to use root instead, but still get the same error.

Any ideas how to fix this?

Thanks a lot!

Is it OK to make installation and run terraform command (init, plan…) from one user (e.g. root), and use another user/API token in main.tf?

I get the same error even after running these commands:

```shell
pveum role add terraform-role -privs "VM.Allocate VM.Clone VM.Config.CDROM VM.Config.CPU VM.Config.Cloudinit VM.Config.Disk VM.Config.HWType VM.Config.Memory VM.Config.Network VM.Config.Options VM.Monitor VM.Audit VM.PowerMgmt Datastore.AllocateSpace Datastore.Audit"
pveum user add terraform@pve
pveum aclmod / -user terraform@pve -role terraform-role
pveum user token add terraform@pve terraform-token --privsep=0
```

and changing main.tf to use this new user.

Didn’t try it yet, but it seems like it is the solution:

https://old.reddit.com/r/Proxmox/comments/11a6lkp/error_when_using_terraform_plan_with_proxmox/j9uf7lk/

Hello, I followed your guide, and have a proxmox_vm_qemu resource which is very similar to yours. However, I keep running into the following error when executing the “terraform apply”.

```
TASK ERROR: can't lock file '/var/lock/qemu-server/lock-100.conf' - got timeout
```

This error seems centered around the disk {} variable, but I haven’t found a workaround. Also, it doesn’t always happen, just most of the times.

Somehow your proxmox host has vmid 100 in a locked state. This can happen for a variety of reasons (editing config file, attempting to startup/shutdown, and a few others). It almost always works to log into the proxmox host and manually delete that file.

How do I stop it from destroying the existing VM when I want to provision a 2nd, 3rd, etc.?

It always destroys the one I previously created.

I am also facing the same issue.

It destroys the previously created VM for small changes I make.

Even when I apply the terraform plan without any changes, it destroys my VM.

Did you find any solution to this problem?

[…] OpenTofu, or haven’t yet tried it in your homelab with libvirt or Proxmox integrations, do yourselves a favor and try it. It’s definitely worth it […]

I have a problem where, when cloning the template to create a VM, terraform apply fails to start the VM because the VM is already started, so the state is left tainted. Have you ever experienced this behavior?

Thanks for the tutorial! Really love it because it made me help understand A LOT. Keep up the good work!

The only thing is that I am running into this:

```
│ Error: 400 Parameter verification failed.
│
│   with proxmox_vm_qemu.game-server[0],
│   on main.tf line 22, in resource "proxmox_vm_qemu" "game-server":
│   22: resource "proxmox_vm_qemu" "game-server" {
```

after trying to apply. I spent three hours trying to find what the problem is, and by now I am pretty desperate. Have any of you code kids had the same problem?

```hcl
resource "proxmox_vm_qemu" "game-server" {
  count       = 1
  name        = "test-vm-${count.index + 1}"
  target_node = var.proxmox_host
  clone       = var.template_name
  agent       = 1
  os_type     = "cloud-init"
  cores       = 2
  sockets     = 1
  memory      = 4096
  scsihw      = "virtio-scsi-pci"
  bootdisk    = "scsi0"
  cpu_type    = "host"

  disk {
    slot     = "scsi0"
    size     = "10G"
    type     = "disk"
    storage  = "local-zfs"
    iothread = true
  }

  network {
    id     = 0
    model  = "virtio"
    bridge = "vmbr0"
  }

  lifecycle {
    ignore_changes = [
      network,
    ]
  }

  # (ip config is omitted)
}
```

I actually tried to get this stuff updated for 2025 a few days ago and ran into the same issue. There is a debug mode where Terraform spits out a lot more info about what’s going on. If you are using a newer provider version, a lot has changed. I haven’t got it all nailed down yet.

Nice guide! I have been working a bit with Terraform for the past few weeks and have now created a module with a loop (to clone and create multiple VMs and add SSH keys, a password, and a static IP). Check it out 🙂

https://github.com/dinodem/terraform-proxmox