Introduction / Background

This post has been a long time coming, and I apologize for how long it’s taken. I noticed that many other blogs left off at the same point I did: get the VMs created, then... nothing. Creating a Kubernetes cluster locally is a much cheaper (read: basically free) way to learn how Kubes works than a cloud-hosted, managed Kubernetes service such as AWS Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), or Google Kubernetes Engine (GKE).

Anyways, I finally had some time to complete the tutorial series so here we are. Since the last post, my wife and I are now expecting our 2nd kid, I put up a new solar panel array, built our 1st kid a new bed, messed around with MacOS Monterey on Proxmox, built garden boxes, and a bunch of other stuff. Life happens. So without much more delay let’s jump back in.

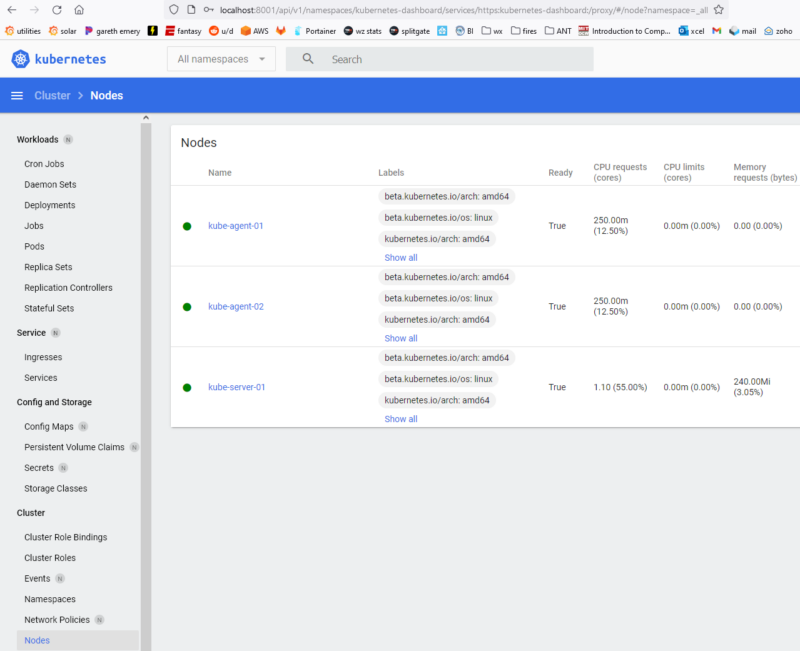

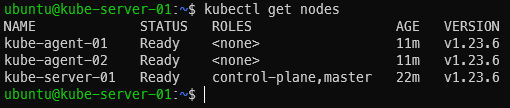

Here’s a screenshot of the end state Kubernetes Dashboard showing our nodes:

Current State

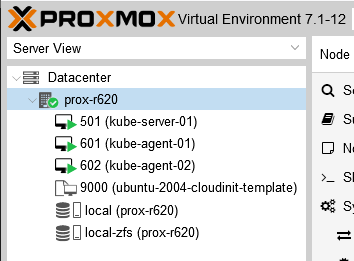

If you’ve followed the blog series so far, you should have four VMs in your Proxmox cluster ready to go with SSH keys set, the hard drive expanded, and the right amount of vCPUs and memory allocated. If you don’t have those ready to go, take a step back (Deploying Kubernetes VMs in Proxmox with Terraform) and get caught up. We’re not going to use the storage VM. Some guides I followed had one but I haven’t found a need for it yet so we’ll skip it.

Ansible

What is Ansible

If you ask DuckDuckGo to define ansible, it will tell you the following: “A hypothetical device that enables users to communicate instantaneously across great distances; that is, a faster-than-light communication device.”

In our case, it is “an open-source software provisioning, configuration management, and application-deployment tool enabling infrastructure as code.”

We will be using Ansible to run the initial Kubernetes setup steps on every machine, initialize the cluster on the master, and join the workers/agents to the cluster.

Initial Ansible Housekeeping

First we need to specify some variables similar to how we did it with Terraform. Create a file in your working directory called ansible-vars.yml and put the following into it:

# specifying a CIDR for our cluster to use.
# can be basically any private range except for ranges already in use.
# apparently it isn't too hard to run out of IPs in a /24, so we're using a /22
pod_cidr: "10.16.0.0/22"

# this defines what the join command filename will be
join_command_location: "join_command.out"

# setting the home directory for retrieving, saving, and executing files
home_dir: "/home/ubuntu"
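To put a number on the /24 versus /22 comment, here is a minimal sketch of a throwaway playbook that reads ansible-vars.yml and prints how many addresses pod_cidr contains. The filename show-pod-cidr-size.yml is a hypothetical one (it is not part of this series), and the sketch assumes it sits in the same working directory as ansible-vars.yml.

```yaml
# show-pod-cidr-size.yml (hypothetical helper, not part of the original series)
- hosts: localhost
  connection: local
  gather_facts: false
  vars_files:
    - ansible-vars.yml
  tasks:
    - name: show how many addresses the pod CIDR contains
      ansible.builtin.debug:
        # the prefix length is the part after the slash; a /22 leaves 32 - 22 = 10 host bits
        msg: "{{ pod_cidr }} contains {{ 2 ** (32 - (pod_cidr.split('/')[1] | int)) }} addresses"
```

A /24 works out to 256 addresses and a /22 to 1024, which is the headroom the comment in the vars file is after. It can be run with ansible-playbook show-pod-cidr-size.yml, no hosts file needed.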

Equally as important (and potentially a better starting point than the variables) is defining the hosts. In ansible-hosts.txt:

# this is a basic file putting different hosts into categories
# used by ansible to determine which actions to run on which hosts

[all]
10.98.1.41
10.98.1.51
10.98.1.52

[kube_server]
10.98.1.41

[kube_agents]
10.98.1.51
10.98.1.52

[kube_storage]
#10.98.1.61
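Ansible also accepts inventories written in YAML. As a rough sketch of the same groups in that format: the filename ansible-hosts.yml and the ansible_user variable are assumptions for illustration, and nothing else in this series depends on them. Since all is a built-in group in Ansible, hosts only need to be listed under their own groups here.

```yaml
# ansible-hosts.yml (hypothetical YAML version of ansible-hosts.txt)
all:
  vars:
    ansible_user: ubuntu   # assumption: set here instead of passing -u ubuntu
  children:
    kube_server:
      hosts:
        10.98.1.41:
    kube_agents:
      hosts:
        10.98.1.51:
        10.98.1.52:
    # kube_storage is left out, matching the commented-out 10.98.1.61 entry
```

If you try this, pass -i ansible-hosts.yml in place of -i ansible-hosts.txt. The rest of this post sticks with the .txt file.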

Checking Ansible can communicate with our hosts

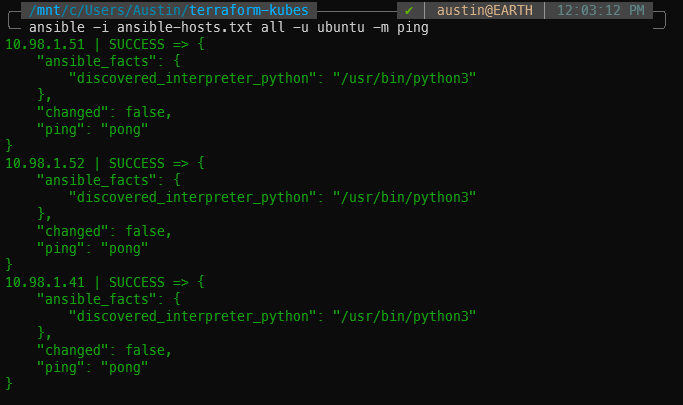

Let’s pause here and make sure Ansible can communicate with our VMs. We will use a simple built-in module named ‘ping’ to do so. The below command broken down:

- -i ansible-hosts.txt – use the ansible-hosts.txt file

- all – run the command against the [all] block from the ansible-hosts.txt file

- -u ubuntu – log in with user ubuntu (since that’s what we set up with the Ubuntu 20.04 Cloud Init template). If you don’t use -u [user], Ansible will attempt to SSH as your currently logged-in user.

- -m ping – run the ping module

ansible -i ansible-hosts.txt all -u ubuntu -m ping

If all goes well, you will receive “ping”: “pong” for each of the VMs you have listed in the [all] block of the ansible-hosts.txt file.
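If you would rather keep this check in a file, the same test can be sketched as a small playbook. The filename ansible-check-connection.yml and the 120 second timeout are arbitrary choices for this example; wait_for_connection and ping are both built-in Ansible modules.

```yaml
# ansible-check-connection.yml (hypothetical filename)
- hosts: all
  remote_user: ubuntu
  gather_facts: false   # gathering facts needs a working connection, so skip it here
  tasks:
    - name: wait until each VM accepts SSH connections
      ansible.builtin.wait_for_connection:
        timeout: 120   # seconds; an arbitrary choice

    - name: same check as the ad-hoc "-m ping" command
      ansible.builtin.ping:
```

Run it with ansible-playbook -i ansible-hosts.txt ansible-check-connection.yml. The wait step is handy right after Terraform finishes, when the VMs may still be booting.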

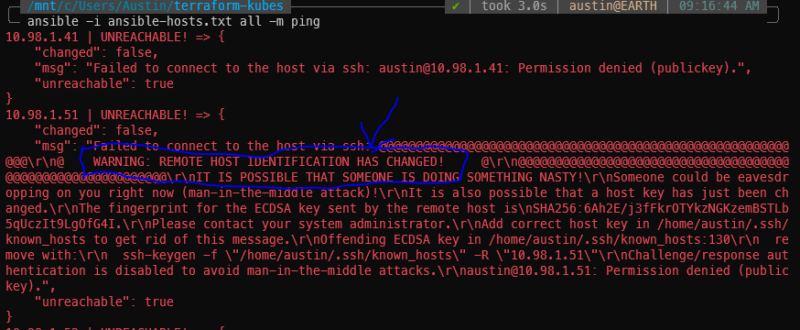

Potential SSH errors

If you’ve previously SSH’d to these IPs and have since destroyed and re-created the VMs, you will get scary-sounding SSH errors saying the remote host identification has changed. Run the suggested ssh-keygen -f command for each of the IPs to fix it.

You might also have to SSH into each of the hosts to accept the host key. I’ve done this whole procedure a couple of times so I don’t recall what will pop up on the first attempt.

ssh-keygen -f "/home/<username_here>/.ssh/known_hosts" -R "10.98.1.41"
ssh-keygen -f "/home/<username_here>/.ssh/known_hosts" -R "10.98.1.51"
ssh-keygen -f "/home/<username_here>/.ssh/known_hosts" -R "10.98.1.52"
ssh-keygen -f "/home/<username_here>/.ssh/known_hosts" -R "10.98.1.61"
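Since Ansible is already in the mix, the same cleanup could be sketched as a playbook that runs on your local machine and removes the stale key for every host in the inventory. This is an alternative to the ssh-keygen commands, not something the original procedure used. The filename ansible-clear-host-keys.yml is hypothetical, and the sketch relies on the known_hosts module editing ~/.ssh/known_hosts by default.

```yaml
# ansible-clear-host-keys.yml (hypothetical filename)
- hosts: localhost
  connection: local
  gather_facts: false
  tasks:
    - name: remove stale host keys for every host in the inventory
      ansible.builtin.known_hosts:
        name: "{{ item }}"
        state: absent
      loop: "{{ groups['all'] }}"   # the IPs listed in ansible-hosts.txt
```

Run it with ansible-playbook -i ansible-hosts.txt ansible-clear-host-keys.yml. Hosts that are commented out of the inventory (like 10.98.1.61) are not touched.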

Installing Kubernetes dependencies with Ansible

Then we need a script to install the dependencies and the Kubernetes utilities themselves. This script does quite a few things: it gets apt ready to install things, adds the Docker and Kubernetes signing keys, installs Docker and Kubernetes, disables swap, and adds the ubuntu user to the docker group.

ansible-install-kubernetes-dependencies.yml:

# https://kubernetes.io/blog/2019/03/15/kubernetes-setup-using-ansible-and-vagrant/
# https://github.com/virtualelephant/vsphere-kubernetes/blob/master/ansible/cilium-install.yml#L57
# ansible .yml files define what tasks/operations to run
---
- hosts: all # run on the "all" hosts category from ansible-hosts.txt
  # become means be superuser
  become: true
  remote_user: ubuntu

  tasks:
    - name: Install packages that allow apt to be used over HTTPS
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          - apt-transport-https
          - ca-certificates
          - curl
          - gnupg-agent
          - software-properties-common

    - name: Add an apt signing key for Docker
      apt_key:
        url: https://download.docker.com/linux/ubuntu/gpg
        state: present

    - name: Add apt repository for stable version
      apt_repository:
        repo: deb [arch=amd64] https://download.docker.com/linux/ubuntu xenial stable
        state: present

    - name: Install docker and its dependencies
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          - docker-ce
          - docker-ce-cli
          - containerd.io

    - name: verify docker installed, enabled, and started
      service:
        name: docker
        state: started
        enabled: yes

    # on newer Ansible installs the mount module lives in the ansible.posix collection;
    # if this task fails with "couldn't resolve module/action 'mount'", run:
    #   ansible-galaxy collection install ansible.posix
    - name: Remove swapfile from /etc/fstab
      mount:
        name: "{{ item }}"
        fstype: swap
        state: absent
      with_items:
        - swap
        - none

    - name: Disable swap
      command: swapoff -a
      when: ansible_swaptotal_mb > 0 # skipped when the VM has no swap configured

    - name: Add an apt signing key for Kubernetes
      apt_key:
        url: https://packages.cloud.google.com/apt/doc/apt-key.gpg
        state: present

    - name: Adding apt repository for Kubernetes
      apt_repository:
        repo: deb https://apt.kubernetes.io/ kubernetes-xenial main
        state: present
        filename: kubernetes.list

    - name: Install Kubernetes binaries
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          # it is usually recommended to specify which version you want to install
          - kubelet=1.23.6-00
          - kubeadm=1.23.6-00
          - kubectl=1.23.6-00

    - name: hold kubernetes binary versions (prevent from being updated)
      dpkg_selections:
        name: "{{ item }}"
        selection: hold
      loop:
        - kubelet
        - kubeadm
        - kubectl

    # this has to do with nodes having different internal/external/mgmt IPs
    # {{ node_ip }} comes from vagrant, which I'm not using yet
    # - name: Configure node ip
    #   lineinfile:
    #     path: /etc/default/kubelet
    #     line: KUBELET_EXTRA_ARGS=--node-ip={{ node_ip }}

    - name: Restart kubelet
      service:
        name: kubelet
        daemon_reload: yes
        state: restarted

    - name: add ubuntu user to docker
      user:
        name: ubuntu
        groups: docker
        append: yes # add docker as a supplementary group; "group:" would change the primary group

    - name: reboot to apply swap disable
      reboot:
        reboot_timeout: 180 # allow 3 minutes for reboot to happen

With our fresh VMs straight outta Terraform, let’s now run the Ansible script to install the dependencies.

Ansible command to run the Kubernetes dependency playbook (pretty straightforward: -i points to the hosts file, and the next argument is the playbook file itself):

ansible-playbook -i ansible-hosts.txt ansible-install-kubernetes-dependencies.yml

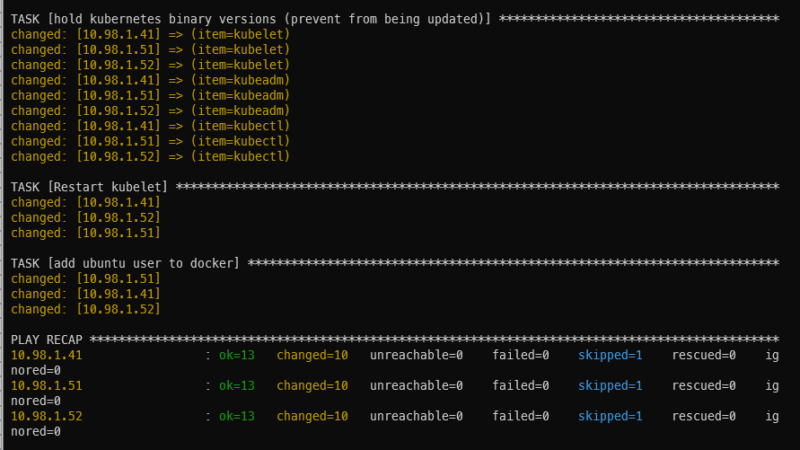

It’ll take a bit of time to run (1m26s in my case). If all goes well, you will be presented with a summary screen (called PLAY RECAP) showing some items in green with status ok and some items in orange with status changed. I got 13 ok’s, 10 changed’s, and 1 skipped.
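Before moving on, it can be worth confirming that the playbook actually did what it claims. The following is a minimal sketch of a verification playbook; the filename ansible-verify-dependencies.yml is hypothetical. It relies on the same ansible_swaptotal_mb fact the dependency playbook uses, and on kubeadm being on the PATH after the install.

```yaml
# ansible-verify-dependencies.yml (hypothetical filename)
- hosts: all
  remote_user: ubuntu
  tasks:
    - name: confirm swap is off after the reboot
      ansible.builtin.assert:
        that:
          - ansible_swaptotal_mb == 0
        fail_msg: "swap is still enabled on this host"

    - name: read the installed kubeadm version
      ansible.builtin.command: kubeadm version -o short
      register: kubeadm_version
      changed_when: false   # read-only check, never report "changed"

    - name: show the kubeadm version
      ansible.builtin.debug:
        var: kubeadm_version.stdout
```

Run it with ansible-playbook -i ansible-hosts.txt ansible-verify-dependencies.yml. Each host should report the 1.23.6 release pinned in the dependency playbook.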

Initialize the Kubernetes cluster on the master

With the dependencies installed, we can now proceed to initialize the Kubernetes cluster itself on the server/master machine. This script sets Docker to use the systemd cgroup driver (the alternative is cgroupfs, which Docker uses out of the box on Ubuntu 20.04; the kubelet and Docker have to agree on a driver, and switching Docker to systemd was the easiest way to get there), initializes the cluster, copies the cluster config to the ubuntu user’s home directory, and installs the Calico networking plugin and the standard Kubernetes dashboard.

ansible-init-cluster.yml:

- hosts: kube_server
  become: true
  remote_user: ubuntu

  vars_files:
    - ansible-vars.yml

  tasks:
    - name: set docker to use systemd cgroups driver
      copy:
        dest: "/etc/docker/daemon.json"
        content: |
          {
            "exec-opts": ["native.cgroupdriver=systemd"]
          }

    - name: restart docker
      service:
        name: docker
        state: restarted

    - name: Initialize Kubernetes cluster
      command: "kubeadm init --pod-network-cidr {{ pod_cidr }}"
      args:
        creates: /etc/kubernetes/admin.conf # skip this task if the file already exists
      register: kube_init

    - name: show kube init info
      debug:
        var: kube_init

    - name: Create .kube directory in user home
      file:
        path: "{{ home_dir }}/.kube"
        state: directory
        owner: 1000
        group: 1000

    - name: Configure .kube/config files in user home
      copy:
        src: /etc/kubernetes/admin.conf
        dest: "{{ home_dir }}/.kube/config"
        remote_src: yes
        owner: 1000
        group: 1000

    - name: restart kubelet for config changes
      service:
        name: kubelet
        state: restarted

    # the original URL (https://projectcalico.docs.tigera.io/manifests/calico.yaml)
    # now returns a 404; readers report that this versioned manifest works instead
    - name: get calico networking
      get_url:
        url: https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/calico.yaml
        dest: "{{ home_dir }}/calico.yaml"

    - name: apply calico networking
      become: no
      command: kubectl apply -f "{{ home_dir }}/calico.yaml"

    - name: get dashboard
      get_url:
        url: https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.0/aio/deploy/recommended.yaml
        dest: "{{ home_dir }}/dashboard.yaml"

    - name: apply dashboard
      become: no
      command: kubectl apply -f "{{ home_dir }}/dashboard.yaml"

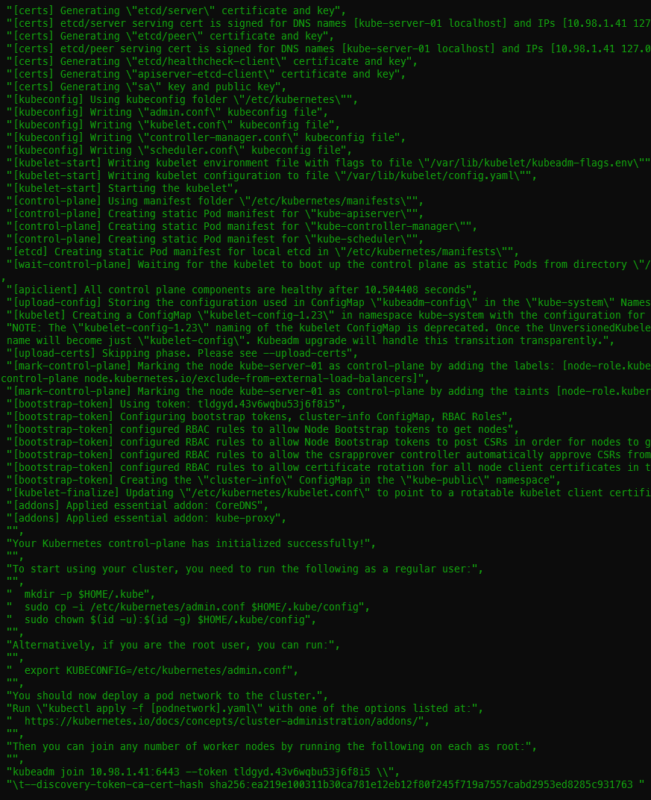

Initializing the cluster took 53s on my machine. One of the first tasks is to download the images which takes the majority of the duration. You should get 13 ok and 10 changed with the init. I had two extra user check tasks because I was fighting some issues with applying the Calico networking.

ansible-playbook -i ansible-hosts.txt ansible-init-cluster.yml
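Since Calico was the part that gave me trouble, a quick follow-up check can save some head scratching. Here is a rough sketch of a playbook that waits for the Calico pods and then lists everything running on the cluster. The filename ansible-check-cluster.yml is hypothetical, and the sketch assumes the Calico manifest creates a DaemonSet named calico-node in the kube-system namespace, so adjust the name if your manifest differs.

```yaml
# ansible-check-cluster.yml (hypothetical filename)
- hosts: kube_server
  become: false   # kubectl uses the ubuntu user's ~/.kube/config
  remote_user: ubuntu
  tasks:
    - name: wait for the Calico node DaemonSet to finish rolling out
      # assumption: the manifest names the DaemonSet calico-node in kube-system
      ansible.builtin.command: kubectl rollout status daemonset/calico-node -n kube-system --timeout=300s
      changed_when: false

    - name: list pods in every namespace
      ansible.builtin.command: kubectl get pods --all-namespaces
      register: all_pods
      changed_when: false

    - name: show the pod list
      ansible.builtin.debug:
        var: all_pods.stdout_lines
```

Run it with ansible-playbook -i ansible-hosts.txt ansible-check-cluster.yml. If the first task times out, the pod list from a manual kubectl get pods --all-namespaces on the master is the place to start looking.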

Getting the join command and joining worker nodes

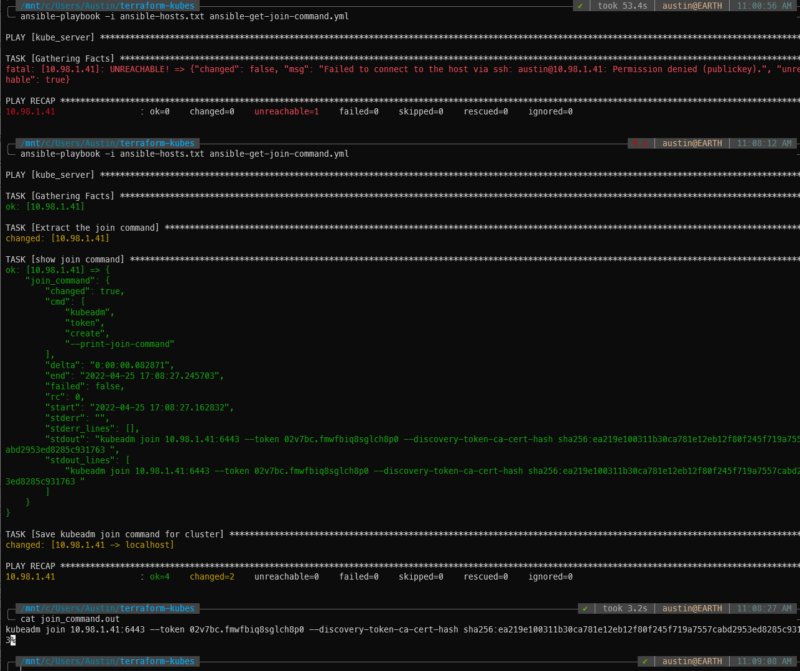

With the master up and running, we need to retrieve the join command. I chose to save the command locally and read the file in a subsequent Ansible playbook. This could certainly be combined into a single playbook.

ansible-get-join-command.yml:

- hosts: kube_server
  become: false
  remote_user: ubuntu

  vars_files:
    - ansible-vars.yml

  tasks:
    - name: Extract the join command
      become: true
      command: "kubeadm token create --print-join-command"
      register: join_command

    - name: show join command
      debug:
        var: join_command

    # runs on your local machine; dest defaults to your local cwd/join_command.out
    - name: Save kubeadm join command for cluster
      local_action: copy content="{{ join_command.stdout_lines | last | trim }}" dest="{{ join_command_location }}"

And for the command:

ansible-playbook -i ansible-hosts.txt ansible-get-join-command.yml

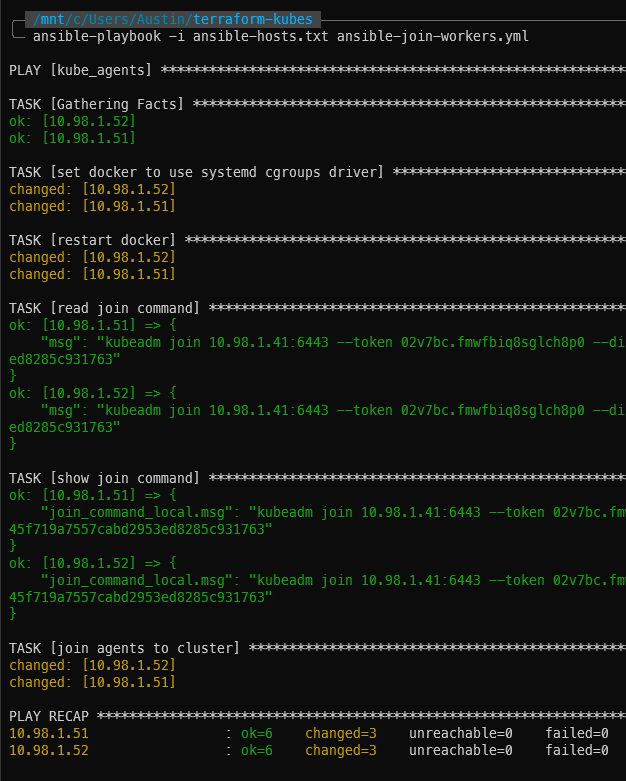

Now to join the workers/agents, our Ansible playbook will read that join_command.out file and use it to join the cluster.

ansible-join-workers.yml:

- hosts: kube_agents
  become: true
  remote_user: ubuntu

  vars_files:
    - ansible-vars.yml

  tasks:
    - name: set docker to use systemd cgroups driver
      copy:
        dest: "/etc/docker/daemon.json"
        content: |
          {
            "exec-opts": ["native.cgroupdriver=systemd"]
          }

    - name: restart docker
      service:
        name: docker
        state: restarted

    - name: read join command
      debug: msg={{ lookup('file', join_command_location) }}
      register: join_command_local

    - name: show join command
      debug:
        var: join_command_local.msg

    - name: join agents to cluster
      command: "{{ join_command_local.msg }}"

And to actually join:

ansible-playbook -i ansible-hosts.txt ansible-join-workers.yml
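As mentioned, the two join playbooks could be combined. One way to sketch that, offered as an alternative and not what I actually ran: a single file with two plays, where the second play reads the registered join command from the master through hostvars instead of a local file. The filename ansible-join-combined.yml is hypothetical, the creates path is an assumption about what kubeadm join leaves behind on a joined node, and the Docker cgroup driver tasks from ansible-join-workers.yml are omitted here and would still need to run on the workers first.

```yaml
# ansible-join-combined.yml (hypothetical filename)
- hosts: kube_server
  become: true
  remote_user: ubuntu
  tasks:
    - name: generate a join command on the master
      ansible.builtin.command: kubeadm token create --print-join-command
      register: join_command

- hosts: kube_agents
  become: true
  remote_user: ubuntu
  tasks:
    - name: join agents using the command registered on the master
      ansible.builtin.command: "{{ hostvars[groups['kube_server'][0]]['join_command']['stdout'] | trim }}"
      args:
        # assumption: this file exists once a node has joined, so re-runs are skipped
        creates: /etc/kubernetes/kubelet.conf
```

The trade-off is that nothing is written to disk, so the join command is only available for the duration of that one ansible-playbook run.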

With the two worker nodes/agents joined to the cluster, you now have a full-on Kubernetes cluster up and running! Wait a few minutes, then log into the server and run kubectl get nodes to verify they are present and active (status = Ready):

kubectl get nodes
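Instead of waiting a few minutes and checking by hand, the wait itself can be scripted. A minimal sketch, assuming the ubuntu user's kubeconfig on the master is set up as in the init playbook; the filename ansible-wait-for-nodes.yml and the 300 second timeout are arbitrary choices for this example.

```yaml
# ansible-wait-for-nodes.yml (hypothetical filename)
- hosts: kube_server
  become: false
  remote_user: ubuntu
  tasks:
    - name: wait for every node to report Ready
      ansible.builtin.command: kubectl wait --for=condition=Ready nodes --all --timeout=300s
      changed_when: false   # read-only check
```

The task fails if any node is still NotReady when the timeout expires, which is usually a sign that the networking plugin has not come up on that node.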

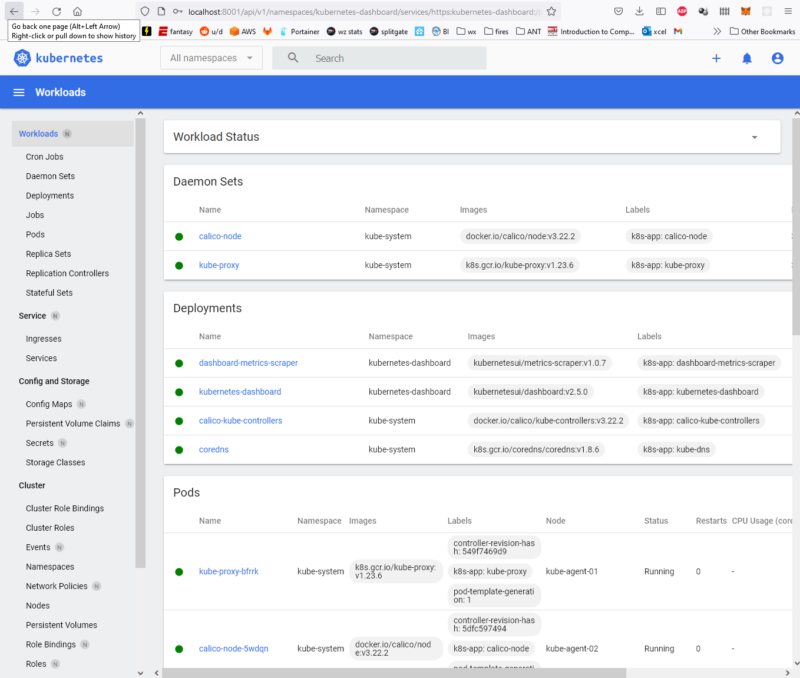

Kubernetes Dashboard

Everyone likes a dashboard. Kubernetes has a good one for poking/prodding around. It appears to basically be a visual representation of most (all?) of the “get information” types of commands you can run with kubectl (kubectl get nodes, get pods, describe stuff, etc.).

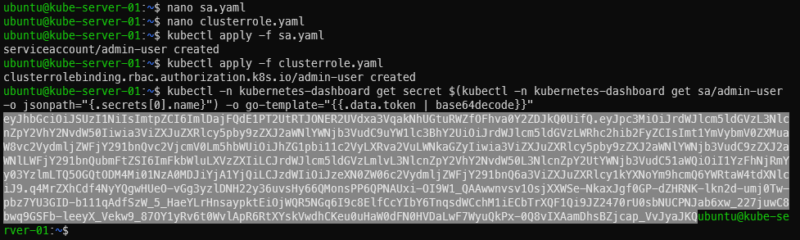

The dashboard was installed with the cluster init script but we still need to create a service account and cluster role binding for the dashboard. These steps are from https://github.com/kubernetes/dashboard/blob/master/docs/user/access-control/creating-sample-user.md. NOTE: the docs state it is not recommended to give admin privileges to this service account. I’m still figuring out Kubernetes privileges so I’m going to proceed anyways.

Dashboard user/role creation

On the master machine, create a file called sa.yaml with the following contents:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

And another file called clusterrole.yaml:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

Apply both, then get the token to be used for logging in. The last command will spit out a long string. Copy it starting at ‘ey’ and ending before the username (ubuntu). In the screenshot I have highlighted which part is the token.

kubectl apply -f sa.yaml

kubectl apply -f clusterrole.yaml

kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"
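If you would rather not create the files on the master by hand, the copy and apply steps could be sketched as one more playbook. This assumes sa.yaml and clusterrole.yaml exist on your local machine next to the playbook; the filename ansible-dashboard-user.yml is hypothetical. Retrieving the token is left as the manual command above.

```yaml
# ansible-dashboard-user.yml (hypothetical filename)
- hosts: kube_server
  become: false   # kubectl uses the ubuntu user's ~/.kube/config
  remote_user: ubuntu
  vars_files:
    - ansible-vars.yml
  tasks:
    - name: copy the two manifests to the master
      ansible.builtin.copy:
        src: "{{ item }}"   # assumption: both files sit next to this playbook
        dest: "{{ home_dir }}/{{ item }}"
      loop:
        - sa.yaml
        - clusterrole.yaml

    - name: apply the manifests
      ansible.builtin.command: kubectl apply -f "{{ home_dir }}/{{ item }}"
      loop:
        - sa.yaml
        - clusterrole.yaml
```

Run it with ansible-playbook -i ansible-hosts.txt ansible-dashboard-user.yml. The same warning applies: this binds the account to cluster-admin, which the dashboard docs do not recommend.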

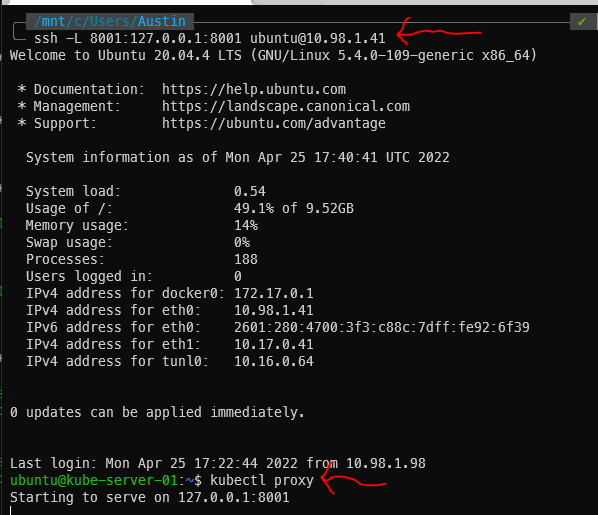

SSH Tunnel & kubectl proxy

At this point, the dashboard has been running for a while. We just can’t get to it yet. There are two distinct steps that need to happen. The first is to create an SSH tunnel between your local machine and a machine in the cluster (we will be using the master). Then, from within that SSH session, we will run kubectl proxy to expose the web services.

SSH command – the master’s IP is 10.98.1.41 in this example:

ssh -L 8001:127.0.0.1:8001 ubuntu@10.98.1.41

The above command will open what appears to be a standard SSH session but the tunnel is running as well. Now execute kubectl proxy:

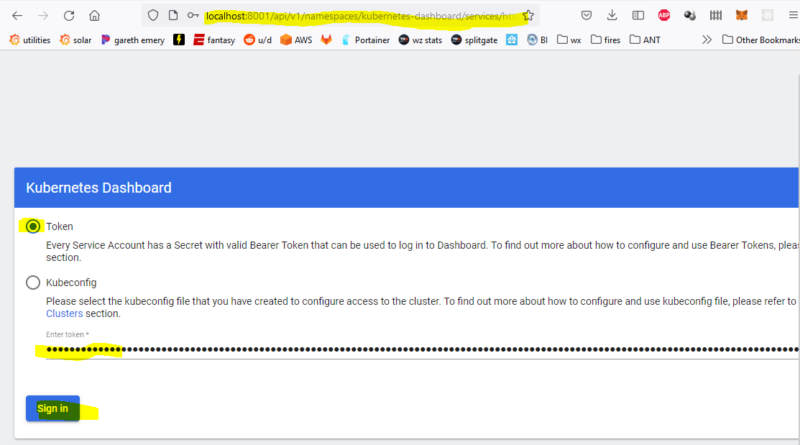

The Kubernetes Dashboard

At this point, you should be able to navigate to the dashboard page from a web browser on your local machine (http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/) and you’ll be prompted to log in. Make sure the token radio button is selected and paste in that long token from earlier. It expires relatively quickly (a couple of hours, I think) so be ready to run the token retrieval command again.

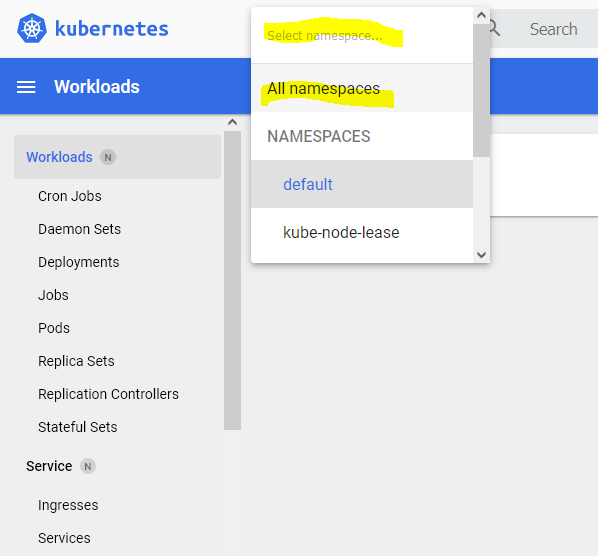

The default view is for the “default” namespace which has nothing in it at this point. Change it to All namespaces for more details:

From here you can see information about everything in the cluster:

Conclusion

With this last post, we have concluded the journey from creating an Ubuntu cloud-init image in Proxmox, through using Terraform to deploy Kubernetes VMs in Proxmox, all the way to deploying an actual Kubernetes cluster in Proxmox using Ansible. Hope you found this useful!

Video link coming soon.

Discussion

For discussion, either leave a comment here or if you’re a Reddit user, head on over to https://www.reddit.com/r/austinsnerdythings/comments/ubsk1i/i_made_a_tutorial_showing_how_to_deploy_a/.

References

https://kubernetes.io/blog/2019/03/15/kubernetes-setup-using-ansible-and-vagrant/

15 replies on “Deploying a Kubernetes Cluster within Proxmox using Ansible”

Wonderful series !

Thanks for taking the time to make this step by step tutorial for complete noobs like me.

Being browsing the web these last weeks and only picking up half tutorial / process / explanation for each part (ansible, proxmox … ) but not a full process from vm to running kubernetes UI.

Haven’t tried it yet but soon … God willing … and congrats for baby and other achievements 🙂

Thanks so much for this series.

Really helped me.

I am getting the following errors:

ERROR! couldn’t resolve module/action ‘mount’. This often indicates a misspelling, missing collection, or incorrect module path.

The error appears to be in ‘/ansible-install-kubernetes-dependencies.yml’: line 52, column 5, but may

be elsewhere in the file depending on the exact syntax problem.

The offending line appears to be:

– name: Remove swapfile from /etc/fstab

^ here

I had to comment out that section for it to completed

Can you please post your full playbook?

Awesome guide series. I was struggling with this stuff for days!

In file ansible-init-cluster.yml line 53 (https://projectcalico.docs.tigera.io/manifests/calico.yaml) no longer exists. What is a suitable replacement for this manifest?

Looks like you can still get it from github https://github.com/projectcalico/calico/releases

it can also be gotten here https://projectcalico.docs.tigera.io/v3.18/manifests/calico.yaml

how i copy the same link into ansible config ?

I created an ansible file that copies the sa.yml and clusterrole.yml to the kube server. it also gets the cluster nodes, applies the sa.yml to create the service account, applies the clusterrole.yml to create Cluster Role Binding, and gets the decoded kubernetes-dashboard token to log into the dashboard.

https://github.com/mredsolomon/kubernetes-ansible/blob/main/copy-file-run-kube-commands.yml

hello Mr austin i have problem with applying the Calico networking

the error says :requested fail HTTP error 404 do you have any clew how to fix it is the url is not right ?

You have to change the calico url in the ansible-init-cluster.yml file to https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/calico.yaml

again hello mr Austin i m runing 1 master node and 2 agent in proxmox with 8G ram can i run my cluster with this amount or kubernets need more ressources and thank you again

Thank you for this awesome Tutorial it really helped me a lot.

Small Remark:

The Calico dependency has changed to

https://raw.githubusercontent.com/projectcalico/calico/v3.25.1/manifests/calico.yaml

See also the Docs: https://docs.tigera.io/calico/latest/getting-started/kubernetes/self-managed-onprem/onpremises

Thanks for the series explaining kubernetes deployment with ansible. I have a reached a stage of calico networking. While applying calico, an error gets thrown (probably api server is not avilable). Please suggest if there a work around. thanks. TASK [apply calico networking] ************************************************************************************************************************************

fatal: [10.10.64.160]: FAILED! => {“changed”: true, “cmd”: [“kubectl”, “apply”, “-f”, “/home/ubuntu/calico.yaml”], “delta”: “0:00:00.071244”, “end”: “2023-07-17 15:19:37.334962”, “msg”: “non-zero return code”, “rc”: 1, “start”: “2023-07-17 15:19:37.263718”, “stderr”: “The connection to the server localhost:8080 was refused – did you specify the right host or port?”, “stderr_lines”: [“The connection to the server localhost:8080 was refused – did you specify the right host or port?”], “stdout”: “”, “stdout_lines”: []}